By Thomas A. Smith | January 2018

A great way to start with adopting AI for your team is to define a reasonable objective that addresses current challenges. In the case of applying the Paradise AI workbench to reservoir characterization, those challenges will be geologic and related to seismic interpretation. Here are some key questions you may want to consider as a guide to your adoption process:

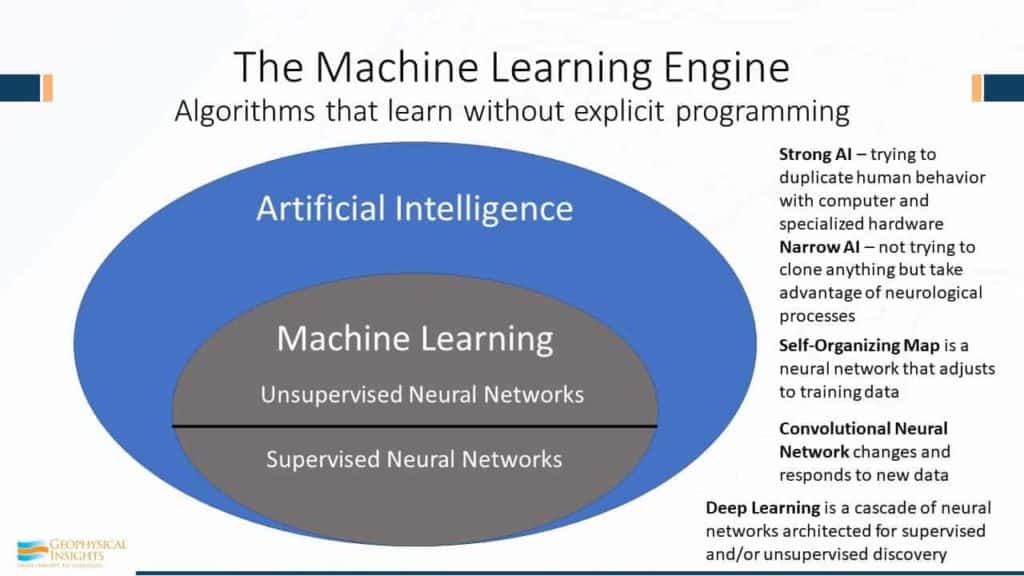

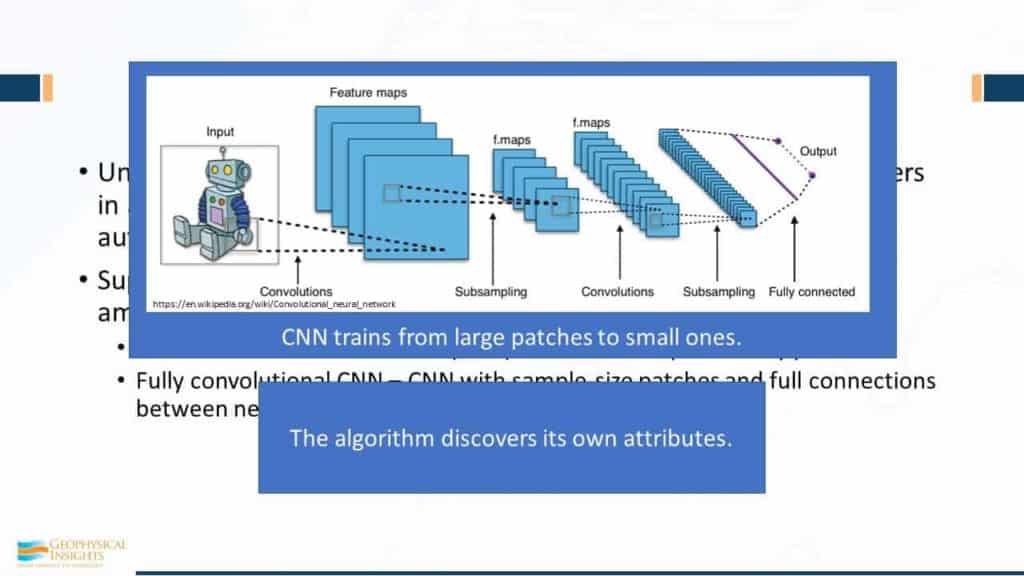

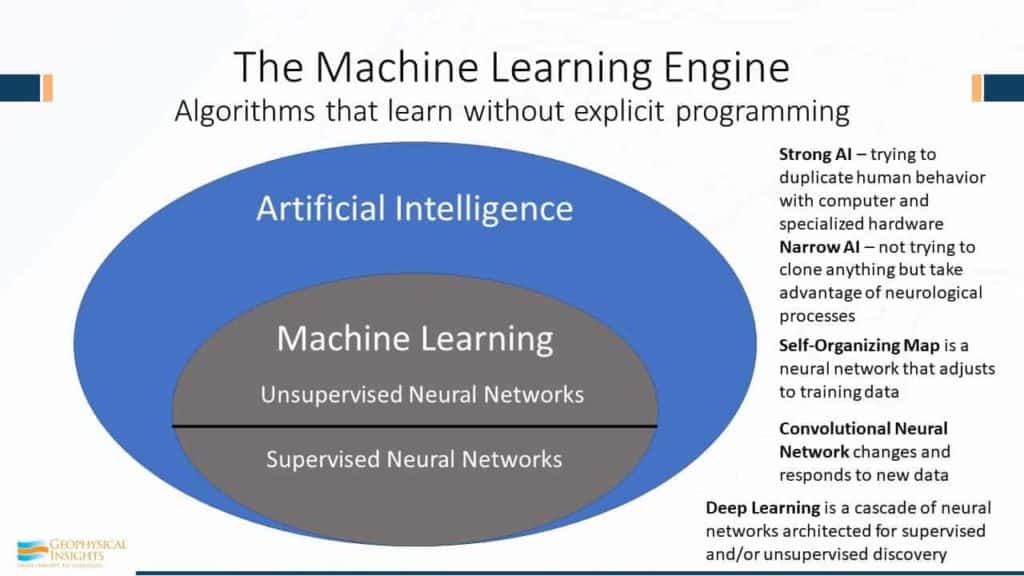

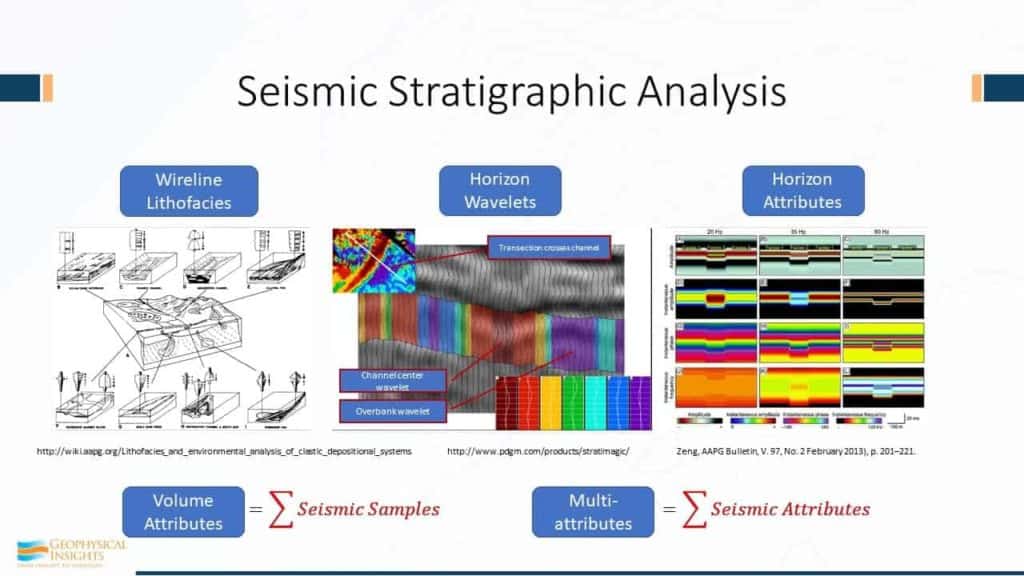

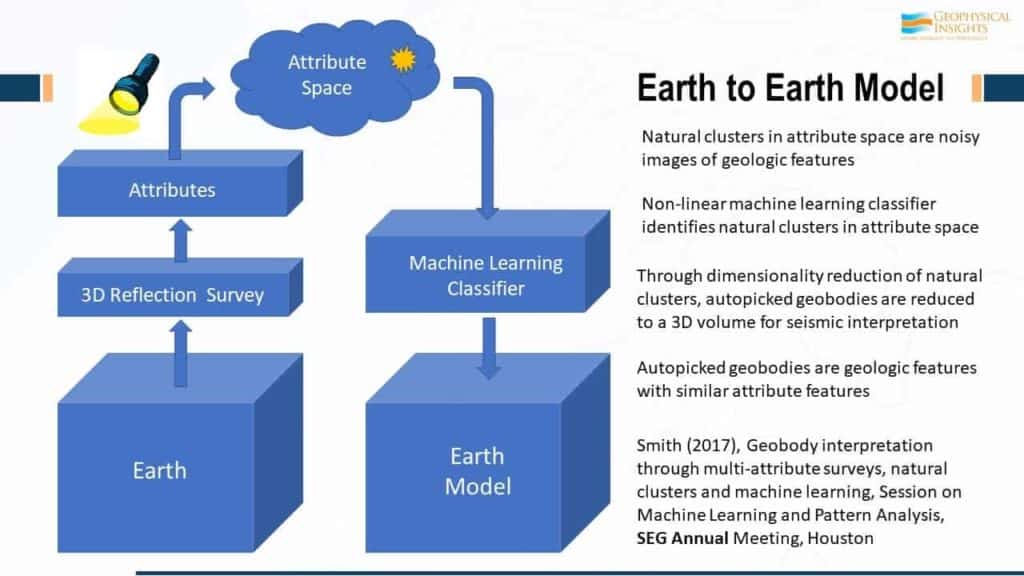

In seismic interpretation workflows, AI is a general class of technologies that includes machine learning (ML) and deep learning (DL) applications. Commercial, off-the-shelf tools like those in the Paradise AI workbench are fit-for-purpose, meaning they support specific geologic investigations. For comparison, Python is a programming environment that offers ML and DL processes. Large language models (LLMs) like ChatGPT can be used to generate Python code, among many other functions. Developers often use Python and ChatGPT together to prototype applications. In Paradise, ML, DL, and data analytic applications are pre-built and organized in workflows (ThoughtFlows®) that provide out-of-the-box solutions requiring no programming, such as Stratigraphic Analysis, Lithofacies Prediction, and Fault Detection.

Jan is a geophysicist with a 30+ year international track record, including 20 years with Schlumberger, 4 years with Weatherford, and recent years actively involved in Machine Learning for both oilfield and non-oilfield applications. His work includes developing solutions and applications around transformer networks, probabilistic Machine Learning, etc. Jan currently works as a technical consultant at Geophysical Insights for Continental Europe, the Middle East, and Asia.

Mike began his career at SRC, a consultancy formed from ECL, where he worked extensively on seismic data offshore West Africa and the North Sea. Mike subsequently joined Geoex MCG, where he provides global G&G technical expertise across their data portfolio. He also heads up the technical expertise within Geoex MCG on CCUS and natural hydrogen. In his role at Perceptum, Mike leads the Machine Learning project investigating seismic and well data offshore Equatorial Guinea.

Tim has a BA in Physics from the University of Oxford and an MSc in Exploration Geophysics from Imperial College, London. He started work as a geophysicist for BP in 1988 in London before moving to Aberdeen. There he also worked for Elf Exploration before his love of technology brought a move into the service sector in 1997. Since then, he has worked for Landmark, Paradigm, and TGS in a variety of managerial, sales, and business development roles. Since 2018, he has worked for Geophysical Insights, promoting Paradise throughout the European region.

We present results of a multi-attribute, machine learning study over a pre-salt carbonate field in the Santos Basin, offshore Brazil. These results test the accuracy and potential of Self-organizing maps (SOM) for stratigraphic facies delineation. The study area has an existing detailed geological facies model containing predominantly reef facies in an elongated structure.

Rapid advances in Machine Learning (ML) are transforming seismic analysis. Using these new tools, geoscientists can accomplish the following quickly and effectively:

Join us for ‘Lunch & Learn’ sessions daily at 11:00, where Dr. Carolan (“Carrie”) Laudon will review the theory and results of applying a combination of machine learning tools to obtain the above results. A detailed agenda follows.

Agenda

Automated Fault Detection using 3D CNN Deep Learning

Demo of Paradise Fault Detection ThoughtFlow®

Stratigraphic analysis using machine learning with fault detection

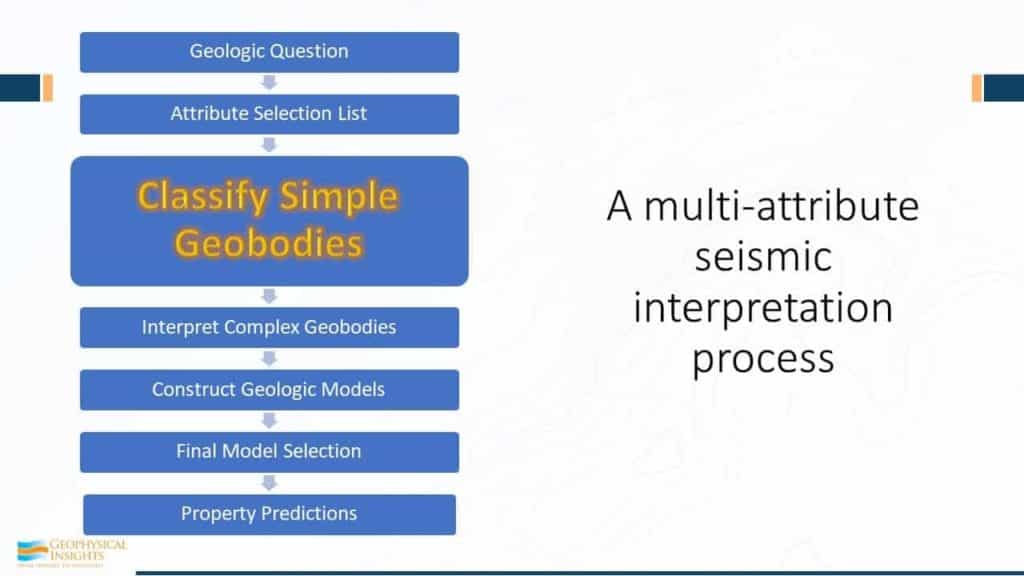

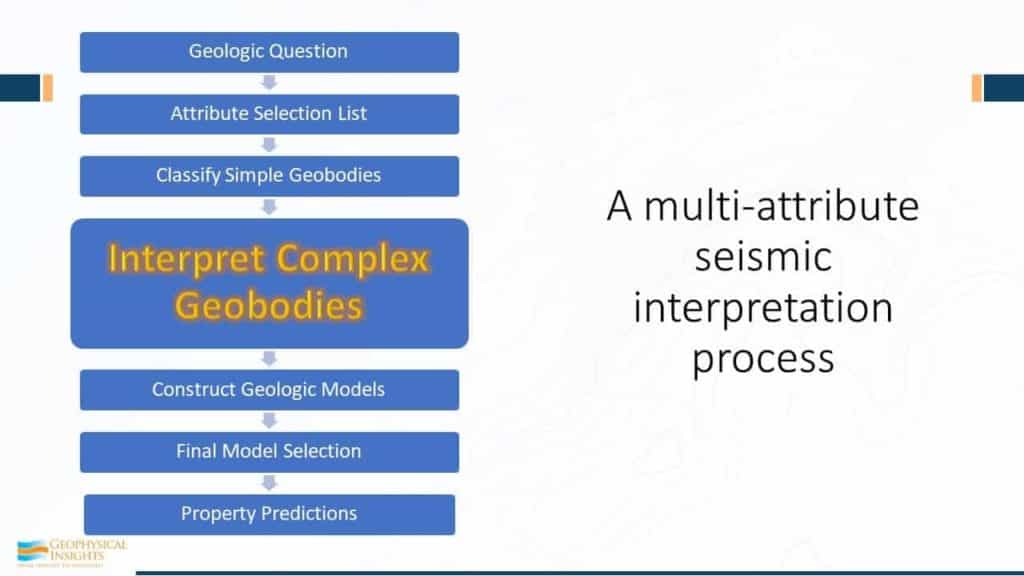

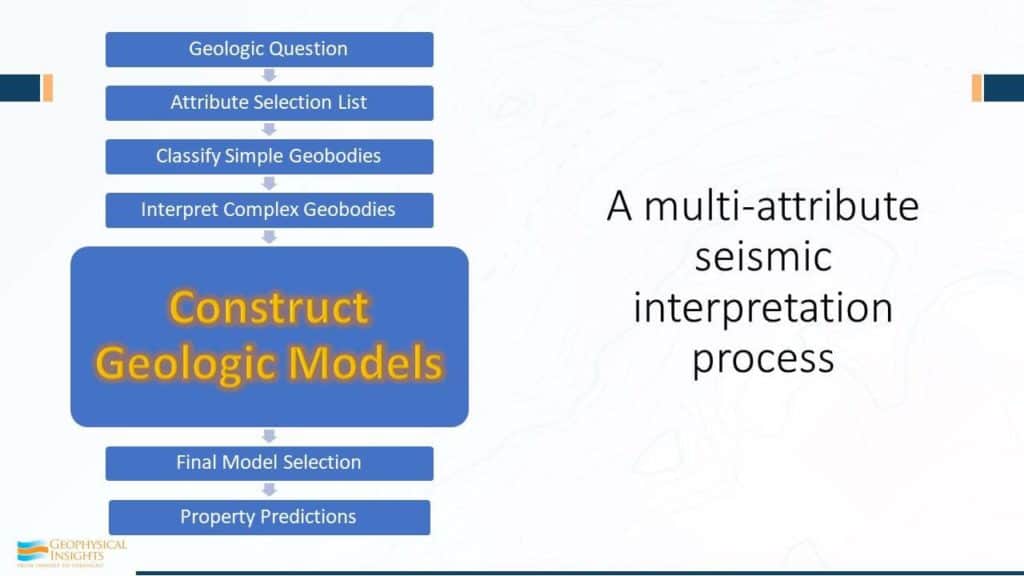

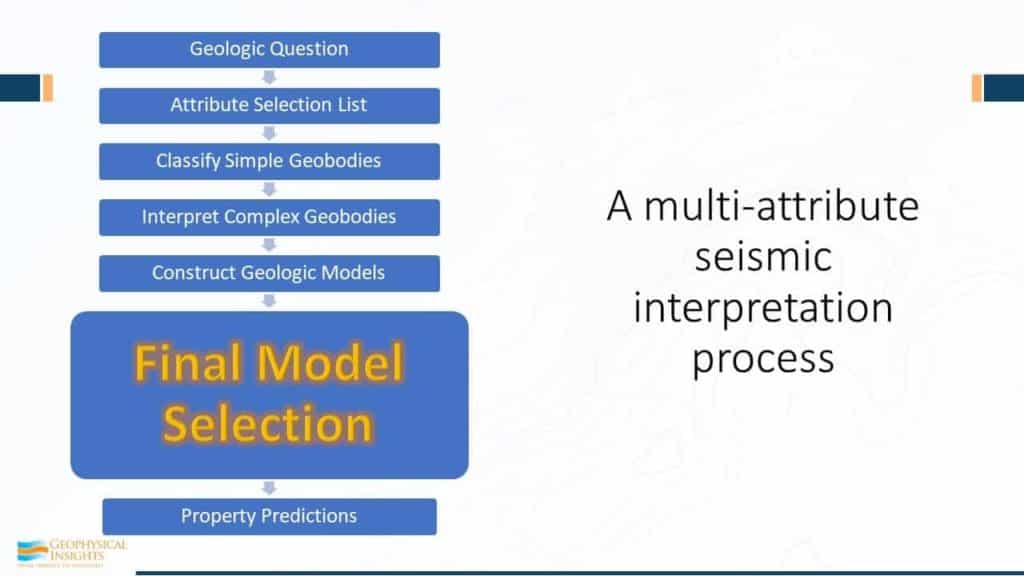

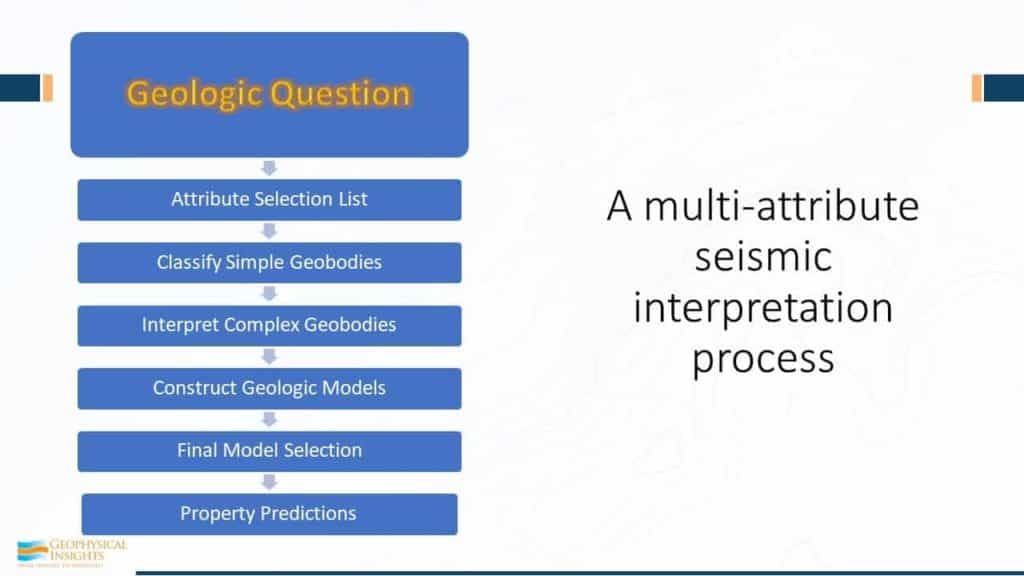

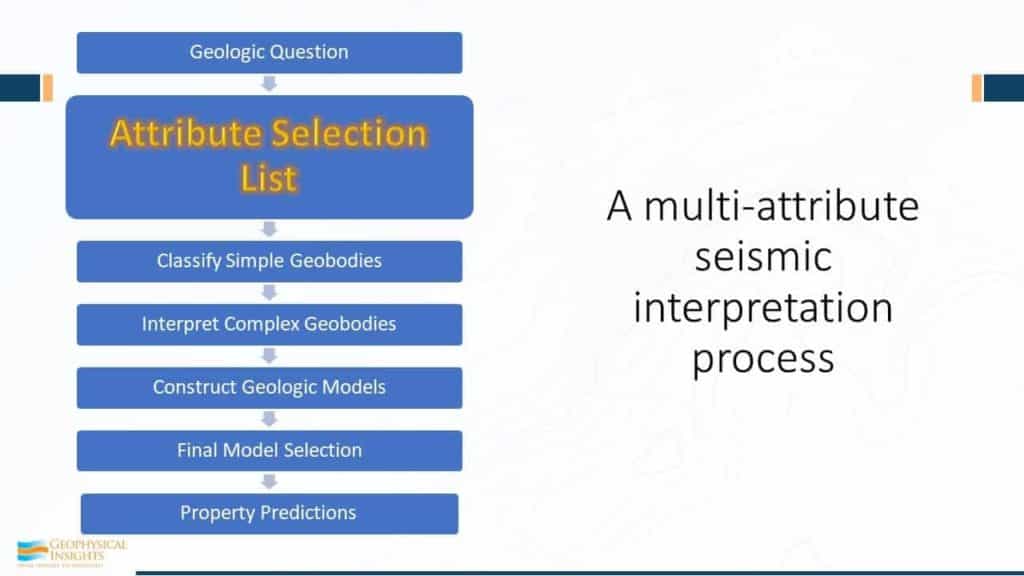

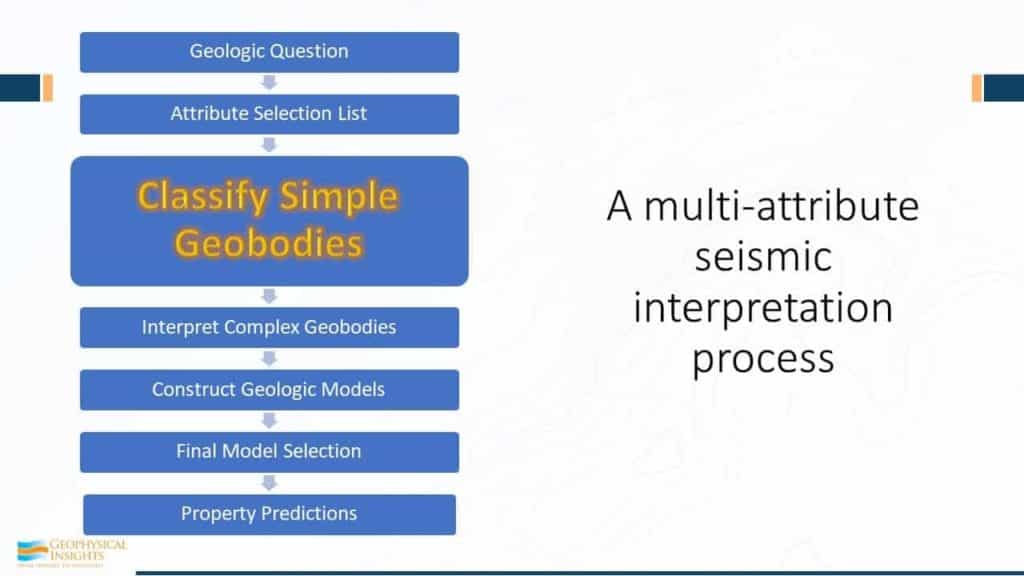

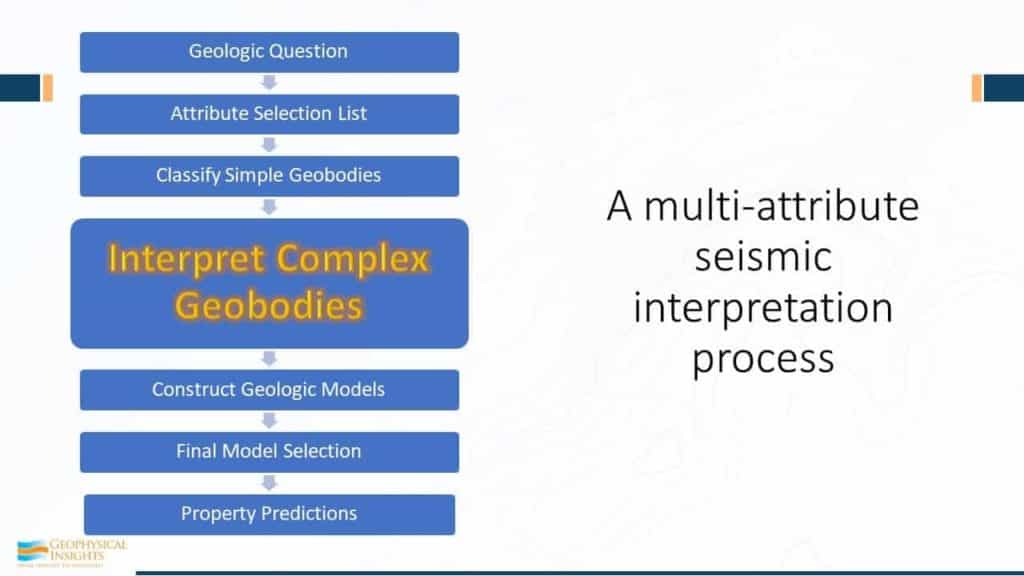

Over the last several years, the industry has invested heavily in Machine Learning (ML) for better predictions and automation. Dramatic results have been realized in exploration, field development, and production optimization. However, many of these applications have been single-use ‘point’ solutions. There is a growing body of evidence that seismic analysis is best served using a combination of ML tools for a specific objective, referred to as ML Orchestration. This talk demonstrates how the Paradise AI workbench applications are used in an integrated workflow to achieve results superior to those of traditional interpretation methods or single-purpose ML products. Using examples that combine ML-based Fault Detection and Stratigraphic Analysis, the talk will show how interpreters who leverage ML Orchestration produce value for exploration and field development.

An innovative Fault Pattern Detection Methodology has been carried out using a combination of Machine Learning techniques to produce a seismic volume suitable for fault interpretation in a structurally and stratigraphically complex field. Through theory and results, the main objective was to demonstrate that a combination of ML tools can generate results superior to those of traditional attribute extraction and data manipulation through conventional algorithms. The ML technologies applied are a supervised deep learning fault classification followed by an unsupervised multi-attribute classification combining fault probability and instantaneous attributes.

Thomas Chaparro is a Senior Geophysicist who specializes in training and preparing AI-based workflows. Thomas also has experience as a processing geophysicist in 2D and 3D seismic data processing. He has participated in projects in the Gulf of Mexico, offshore Africa, the North Sea, Australia, Alaska, and Brazil.

Thomas holds a bachelor’s degree in Geology from Northern Arizona University and a Master’s in Geophysics from the University of California, San Diego. His research focus was computational geophysics and seismic anisotropy.

Bachelor’s Degree in Geophysical Engineering from Central University in Venezuela with a specialization in Reservoir Characterization from Simon Bolivar University.

Over 20 years exploration and development geophysical experience with extensive 2D and 3D seismic interpretation including acquisition and processing.

Aldrin spent his formative years working on exploration activity at PDVSA in Venezuela, followed by a period as a G&G consultant for a major international consulting company in the Gulf of Mexico (Landmark, Halliburton). He later worked at Helix in Scotland, UK, on producing assets in the Central and Southern North Sea. From 2007 to 2021, he worked as a Senior Seismic Interpreter in Dubai on dedicated development projects in the Caspian Sea.

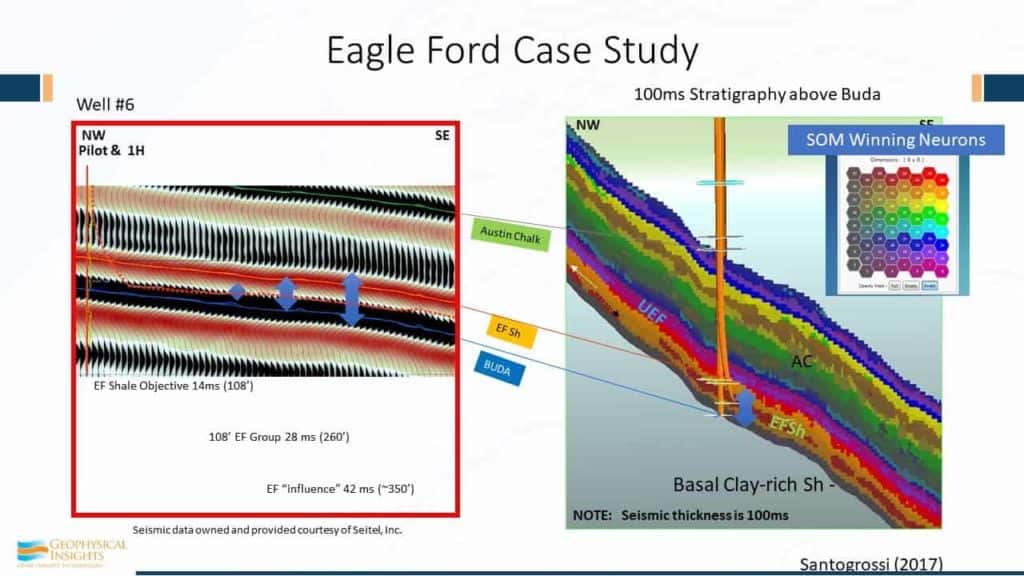

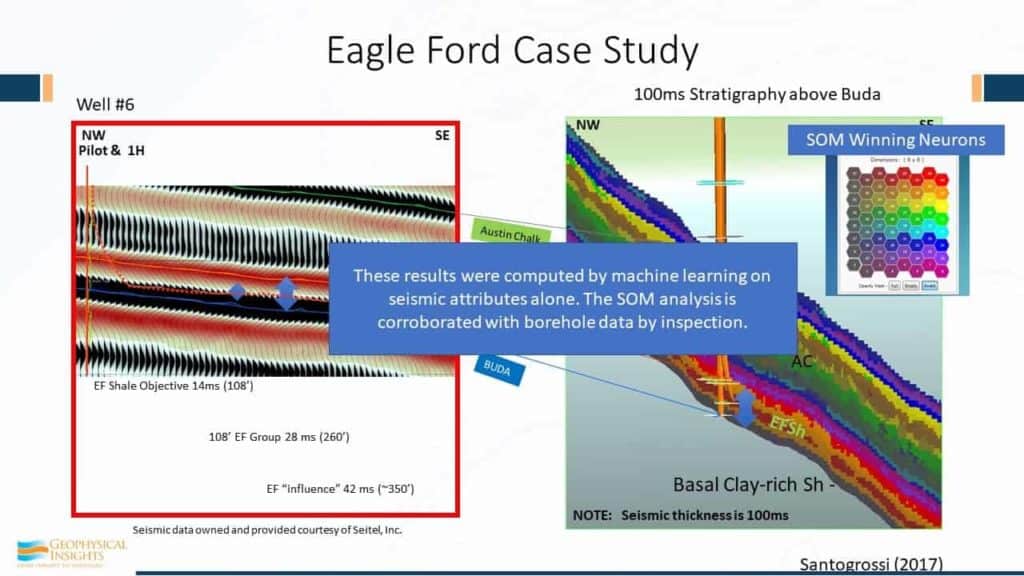

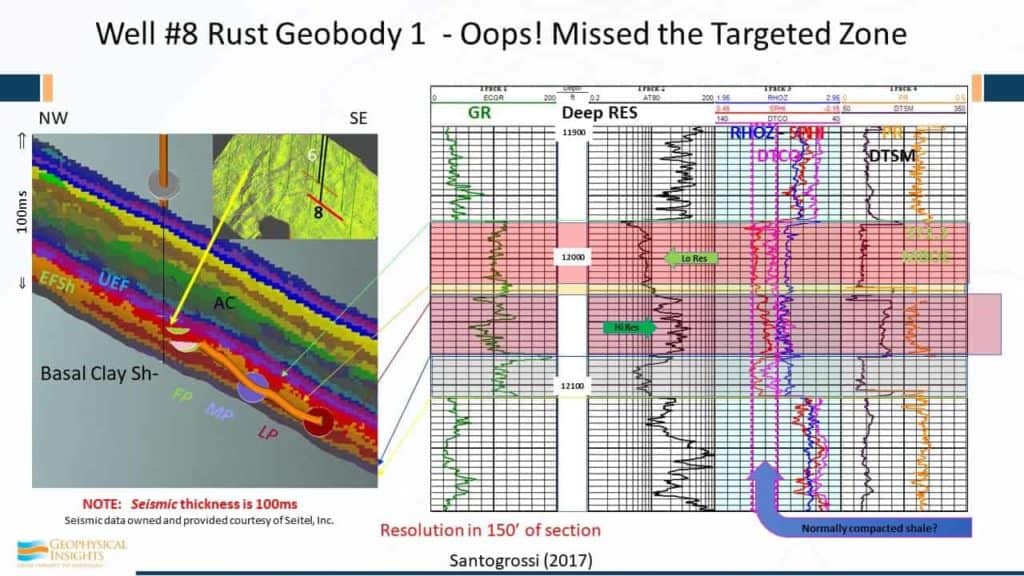

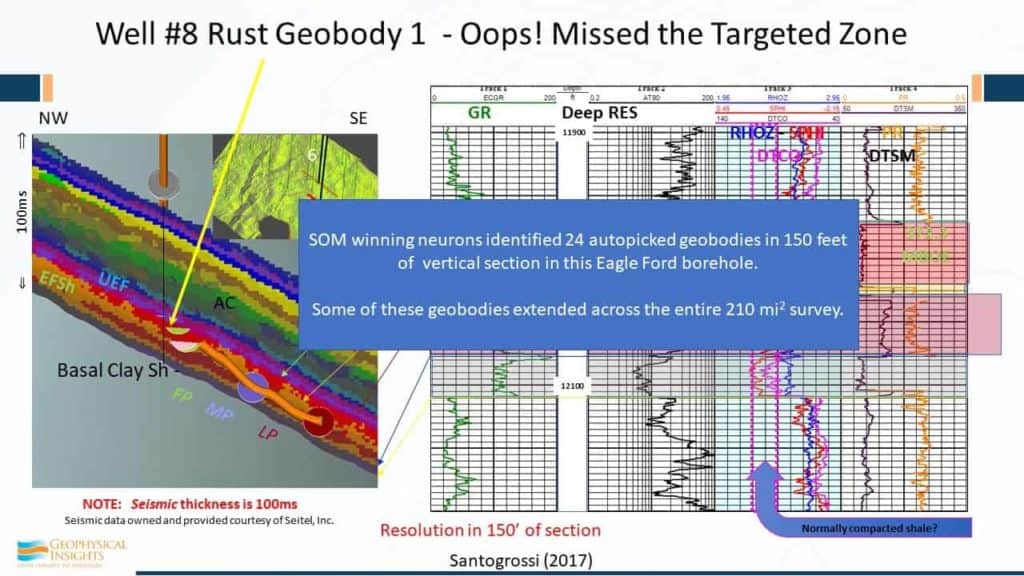

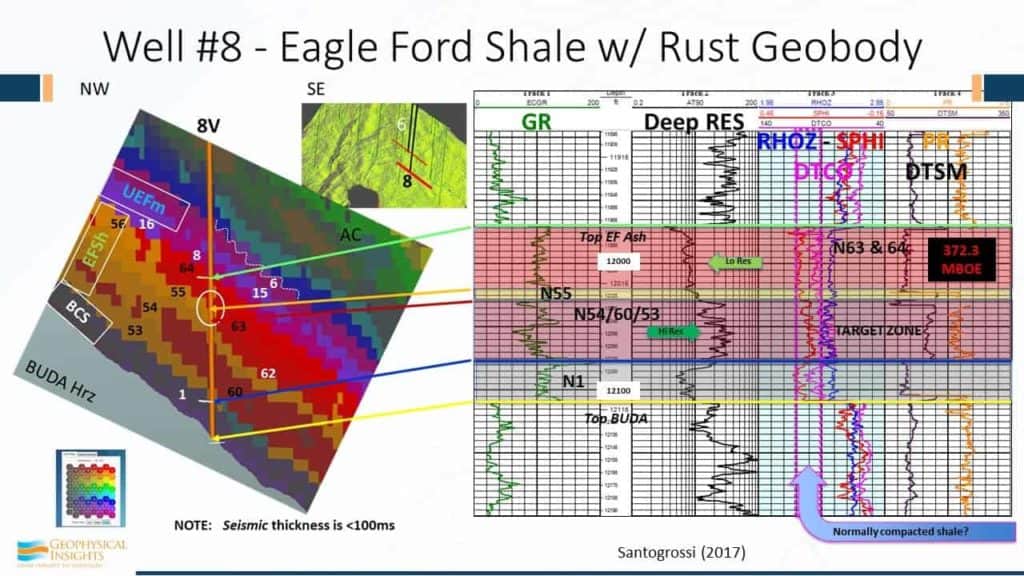

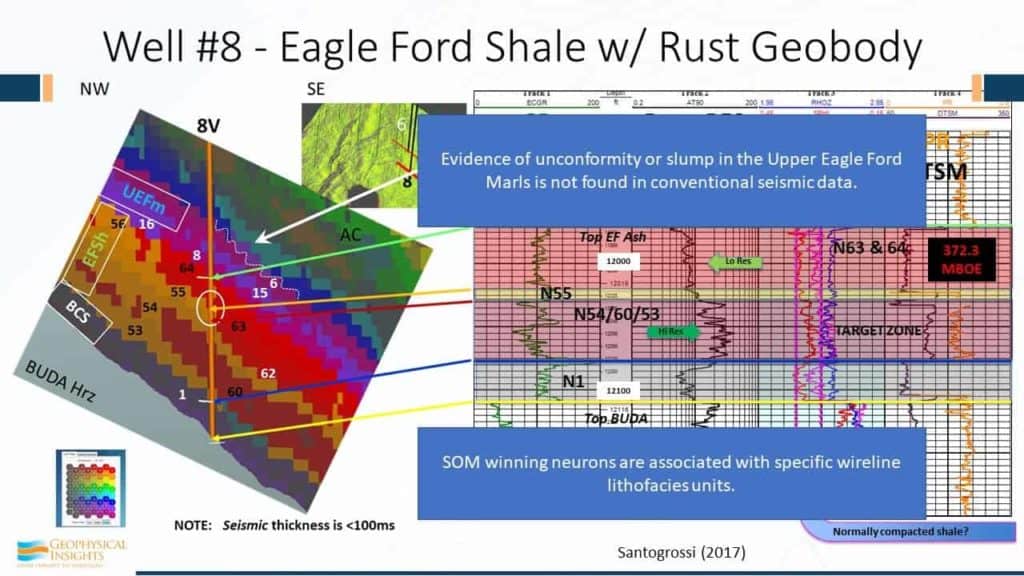

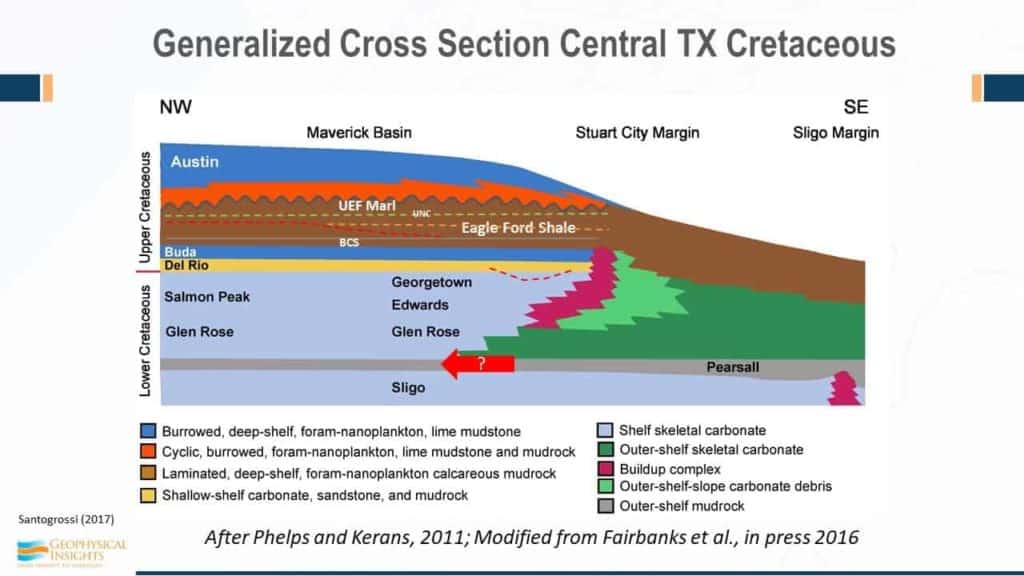

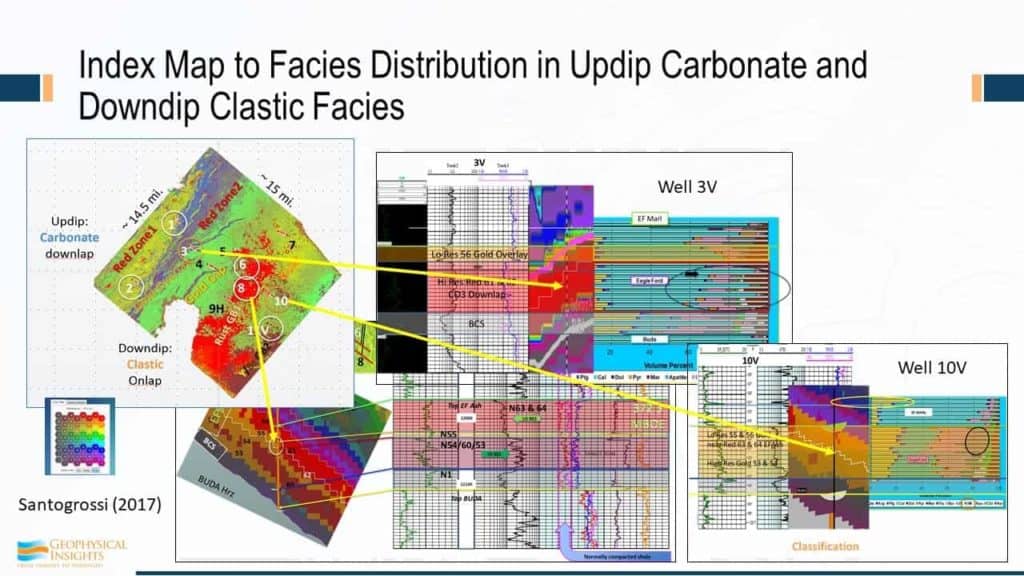

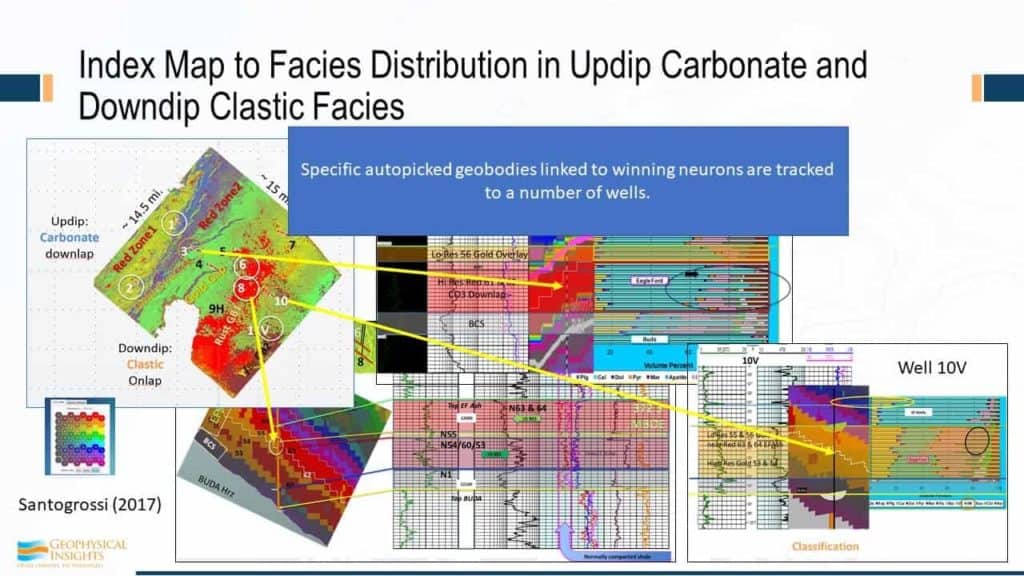

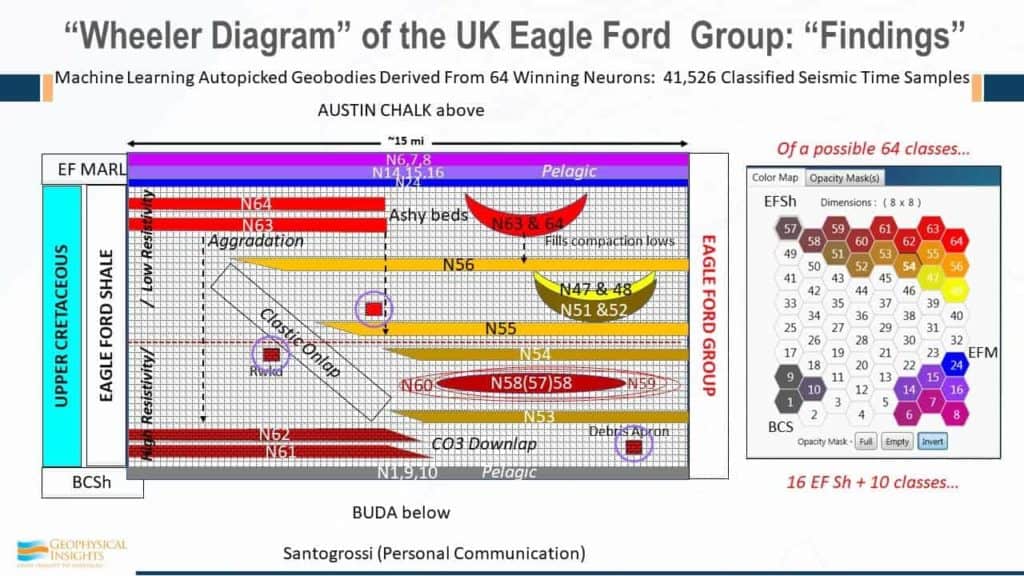

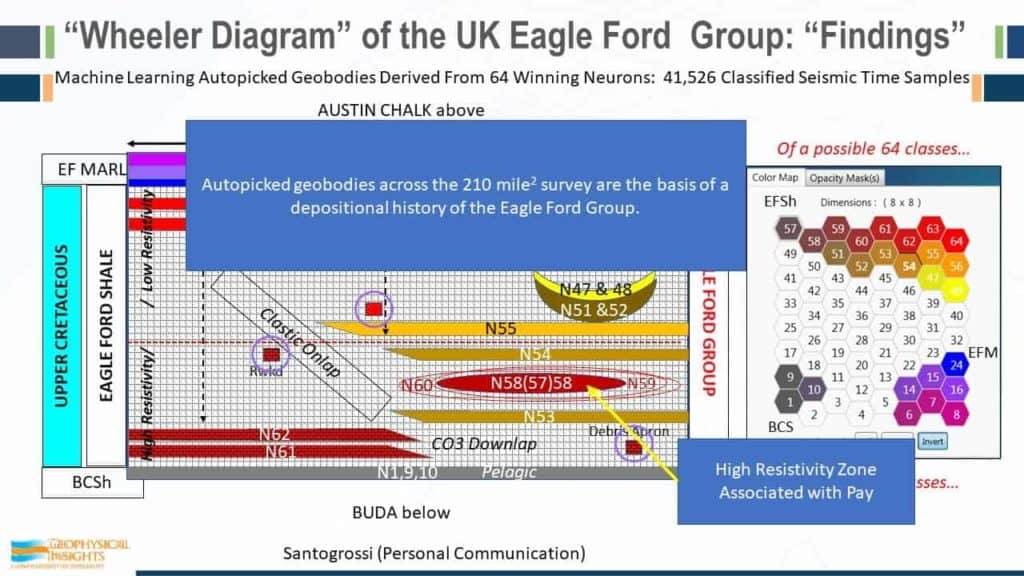

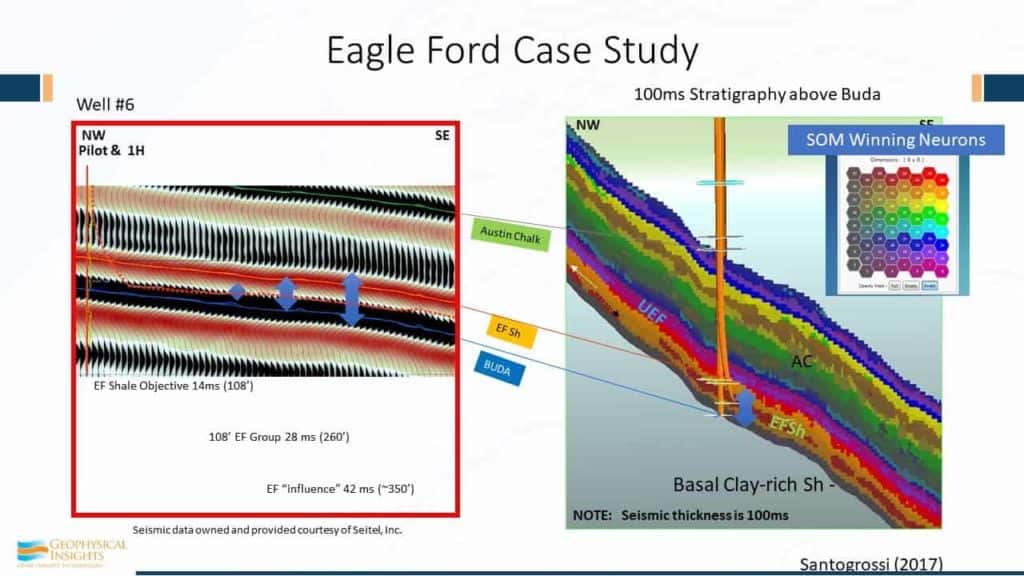

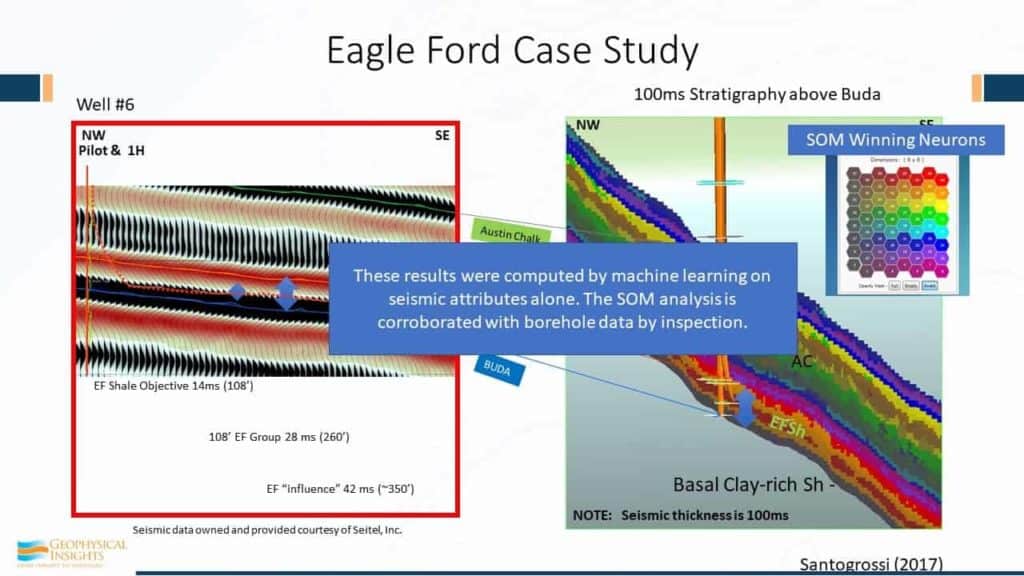

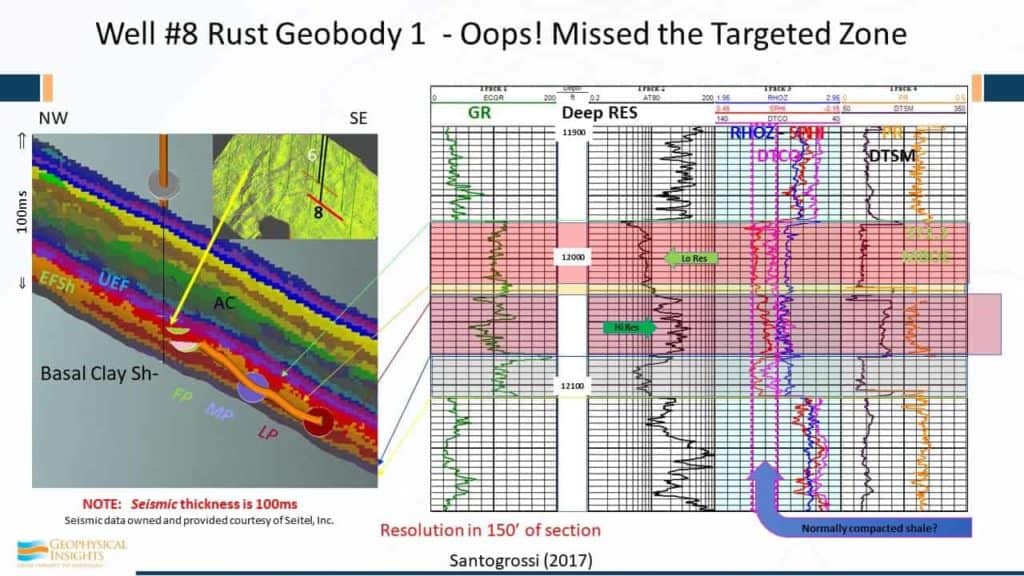

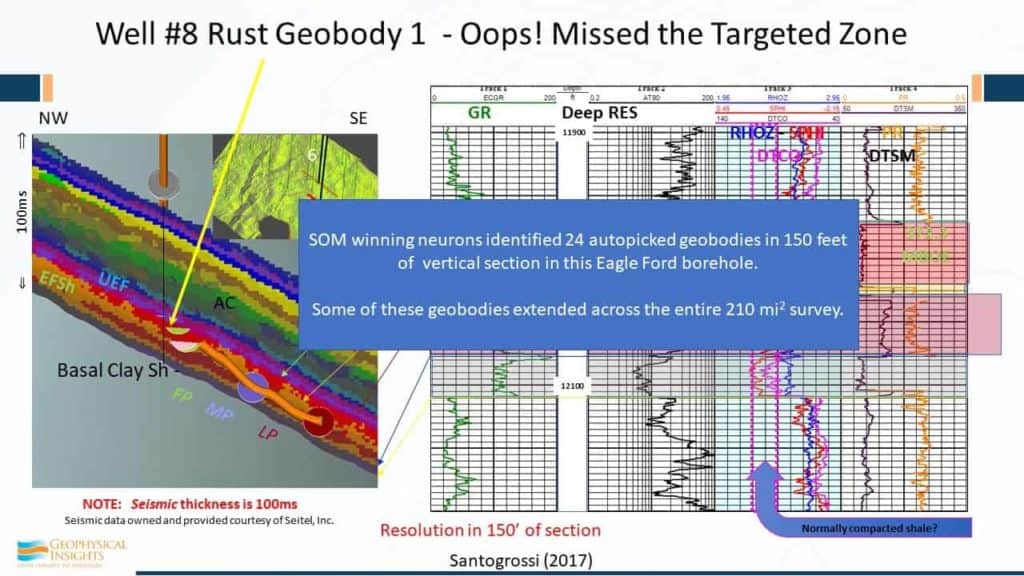

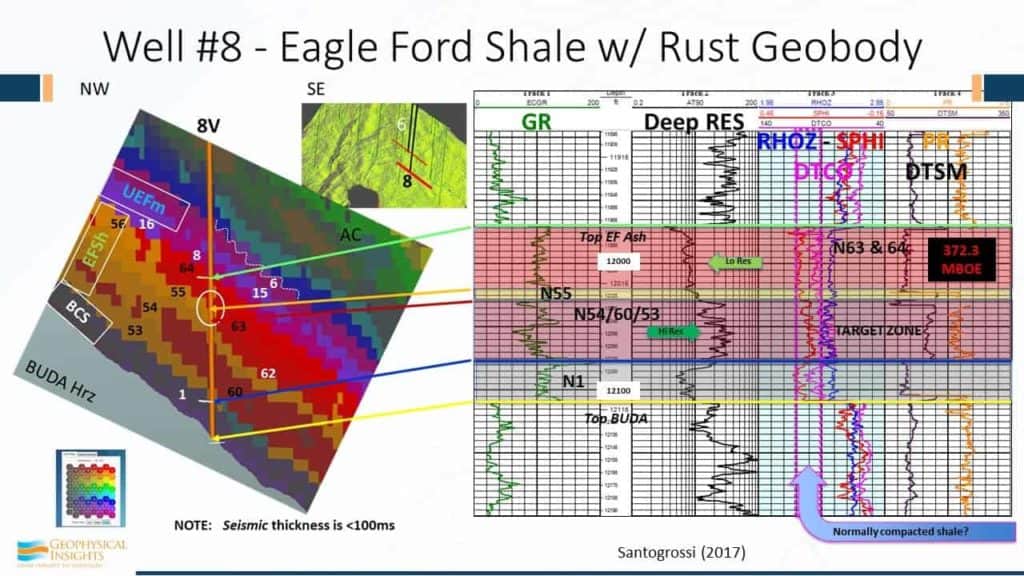

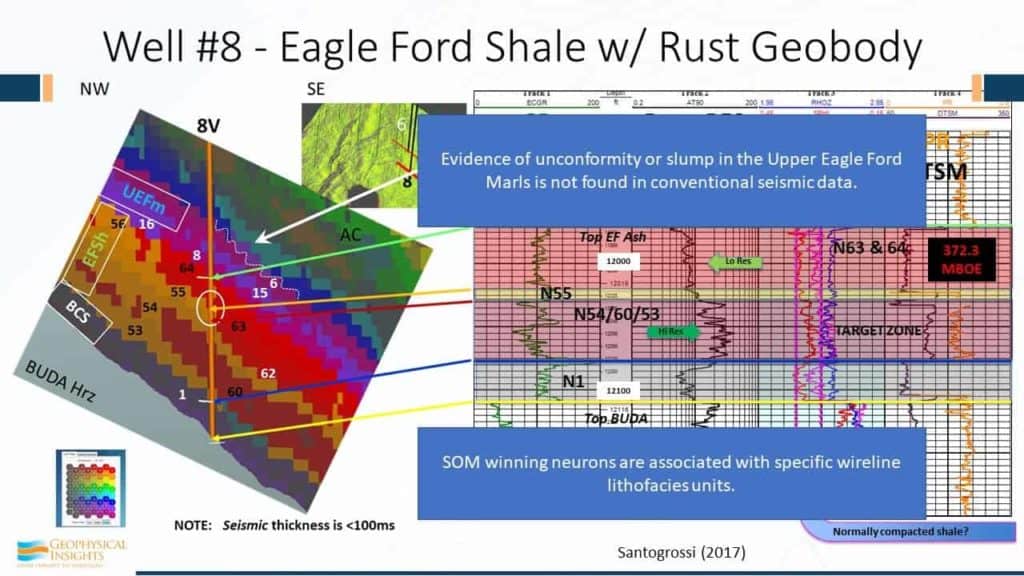

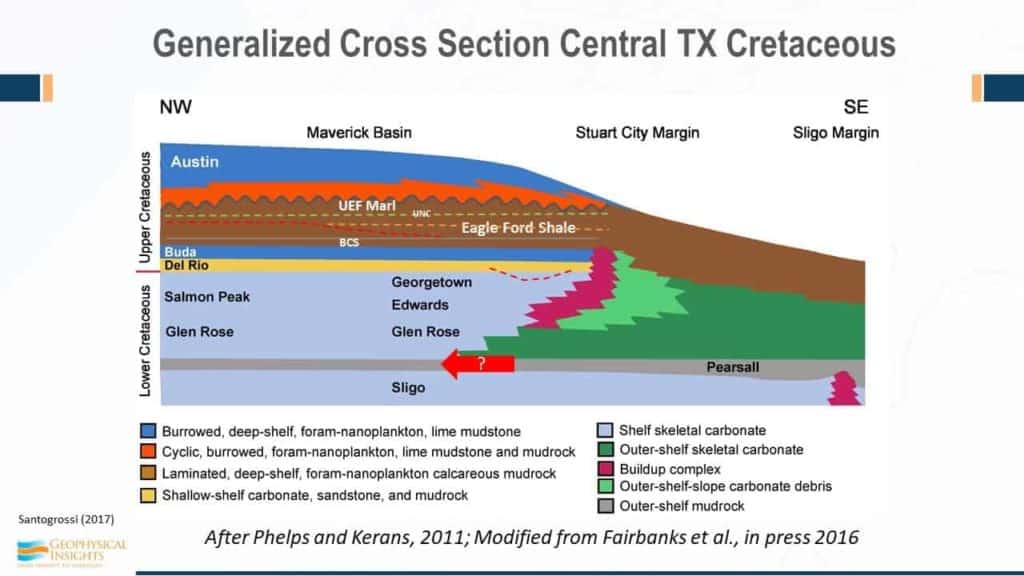

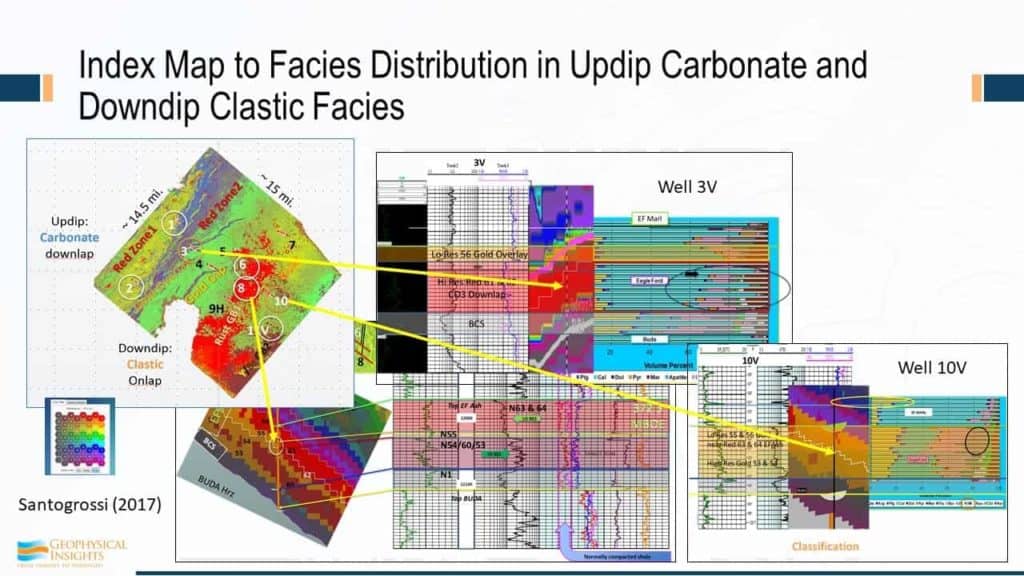

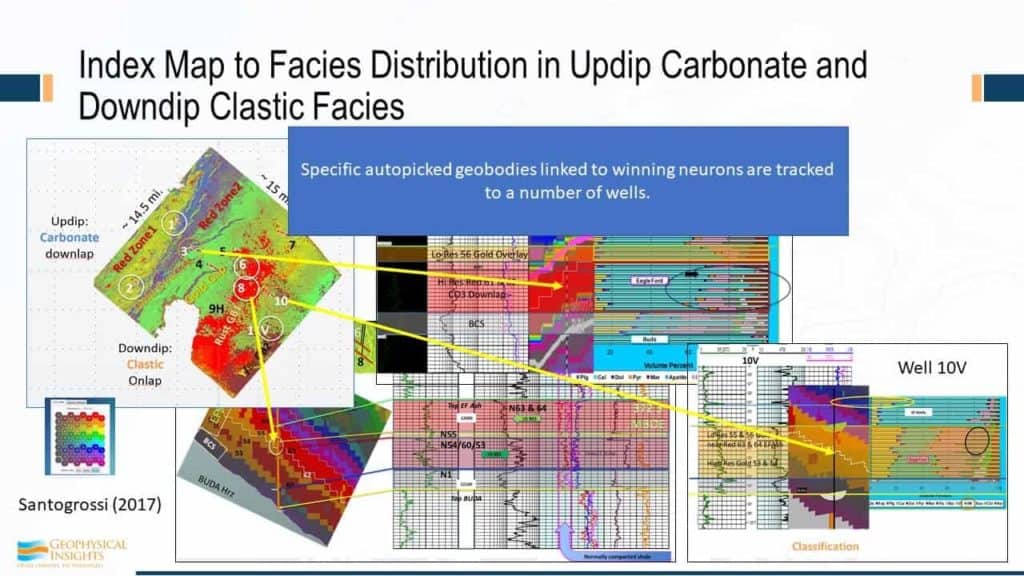

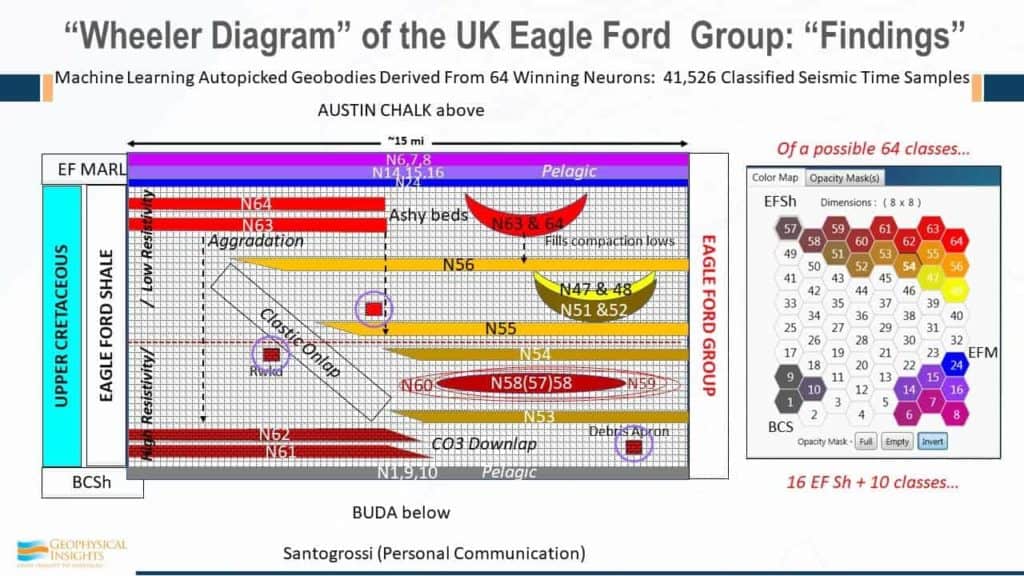

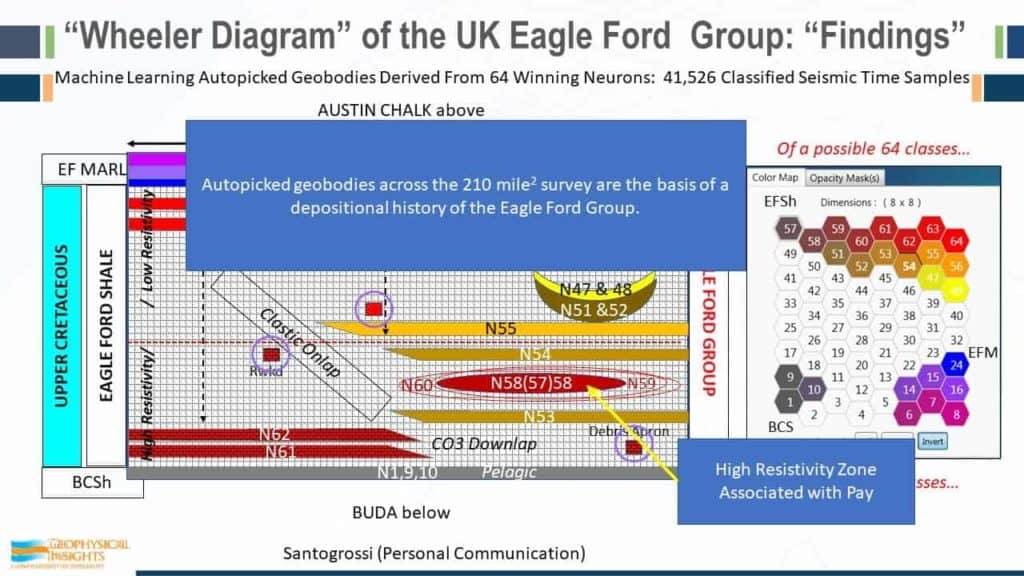

The key to understanding clastic reservoirs in Paradise starts with good synthetic ties to the wavelet data. If the tie is not correct, it is easy to misinterpret neurons as reservoir when they are not. Secondly, the workflow should use Principal Component Analysis to better understand the zone of interest and the attributes to use in the SOM analysis. An important part of interpretation is understanding “Halo” and “Trailing” neurons as part of the stack around a reservoir or potential reservoir. Deep, high-pressured reservoirs often “leak” or have vertical percolation into the seal. This changes the rock properties in the seal enough to create a “halo” effect in the SOM. Likewise, frequency changes in the seismic data can cause a subtle “dim-out” that is not necessarily observable in the wavelet data but is enough to create a different pattern in the Earth in terms of these rock property changes. Case histories for halo and trailing neural information include a deep, pressured Chris R reservoir in Southern Louisiana, Frio pay in Southeast Texas, and AVO properties in the Yegua of Wharton County. Additional case histories that highlight interpretation include thin-bed pays in Brazoria County, with updated information using CNN fault skeletonization. The interpretation discussion continues with a case history in Wharton County on using Low Probability to help explore Wilcox reservoirs. Lastly, the talk looks at using Paradise to help find sweet spots in unconventional reservoirs like the Eagle Ford, a case study provided by Patricia Santigrossi.
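As a rough illustration of how Principal Component Analysis can indicate which attributes to carry into a SOM, the sketch below ranks attributes by the size of their loadings on the first principal component. It is a minimal, standard-library example and not the Paradise implementation; the attribute names and sample values are made up for illustration, and power iteration is used only to keep the example dependency-free.

```python
import math

def standardize(columns):
    """Scale each attribute to zero mean and unit variance."""
    out = []
    for col in columns:
        mean = sum(col) / len(col)
        std = math.sqrt(sum((v - mean) ** 2 for v in col) / len(col))
        out.append([(v - mean) / std for v in col])
    return out

def correlation_matrix(columns):
    """Correlation between every pair of attributes."""
    z = standardize(columns)
    n = len(z[0])
    return [[sum(a * b for a, b in zip(zi, zj)) / n for zj in z] for zi in z]

def first_principal_component(matrix, iterations=200):
    """Power iteration: dominant eigenvalue and unit eigenvector."""
    vec = [1.0] * len(matrix)
    for _ in range(iterations):
        nxt = [sum(m * v for m, v in zip(row, vec)) for row in matrix]
        norm = math.sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
    projected = [sum(m * v for m, v in zip(row, vec)) for row in matrix]
    eigenvalue = sum(v * p for v, p in zip(vec, projected))
    return eigenvalue, vec

# Hypothetical attribute samples from a zone of interest (illustrative values only).
attributes = {
    "envelope": [1.0, 2.0, 3.0, 4.0, 5.0],
    "sweetness": [2.0, 4.0, 6.0, 8.0, 10.0],
    "inst_frequency": [1.0, -1.0, 1.0, -1.0, 1.0],
}
names = list(attributes)
corr = correlation_matrix([attributes[name] for name in names])
eigenvalue, loadings = first_principal_component(corr)
explained = eigenvalue / len(names)  # share of total variance in the first component
ranked = sorted(zip(names, loadings), key=lambda p: abs(p[1]), reverse=True)
for name, loading in ranked:
    print(f"{name:15s} loading={loading:+.3f}")
print(f"first component explains {explained:.0%} of the variance")
```

In this toy data the first two attributes vary together and dominate the first component, while the third contributes almost nothing to it. In practice an interpreter would inspect several components, not just the first, before selecting attributes.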

Machine Learning in the Cloud will address the capabilities of the Paradise AI Workbench, featuring on-demand access enabled by the flexible hardware and storage facilities available on Amazon Web Services (AWS) and other commercial cloud services. Like the on-premise instance, Paradise On-Demand provides guided workflows to address many geologic challenges and investigations. The presentation will show how geoscientists can accomplish the following workflows quickly and effectively using guided ThoughtFlows® in Paradise:

Attend the talk to see how ML applications are combined through a process called "Machine Learning Orchestration," proven to extract more from seismic and well data than traditional means.

The Oligocene Frio gas-producing Stratton Field in south Texas is a well-known field. Like many onshore fields, the productive sand channels are difficult to identify using conventional seismic data. However, the productive channels can be easily defined by employing several Paradise modules, including unsupervised machine learning, Principal Component Analysis, Self-Organizing Maps, 3D visualization, and the new Well Log Cross Section and Well Log Crossplot tools. The Well Log Cross Section tool generates extracted seismic data, including SOMs, along the Cross Section boreholes and logs. This extraction process enables the interpreter to accurately identify the SOM neurons associated with pay versus neurons associated with non-pay intervals. The reservoir neurons can be visualized throughout the field in the Paradise 3D Viewer, with Geobodies generated from the neurons. With this ThoughtFlow®, pay intervals previously difficult to see in conventional seismic can finally be visualized and tied back to the well data.
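The idea of separating pay neurons from non-pay neurons along a borehole can be sketched as a simple tally. The example below assumes that each extracted sample carries a winning-neuron number and a pay flag from the well logs; the function name, the sample values, and the 75% cutoff are all hypothetical and are not taken from the Paradise tools.

```python
from collections import defaultdict

def pay_fraction_by_neuron(neuron_ids, pay_flags):
    """Fraction of samples flagged as pay for each winning neuron."""
    counts = defaultdict(lambda: [0, 0])  # neuron -> [pay samples, total samples]
    for neuron, is_pay in zip(neuron_ids, pay_flags):
        counts[neuron][1] += 1
        if is_pay:
            counts[neuron][0] += 1
    return {neuron: pay / total for neuron, (pay, total) in counts.items()}

# Hypothetical samples extracted along one borehole (illustrative values only).
neurons = [12, 12, 7, 7, 7, 33, 33, 12, 7, 33]
pay = [1, 1, 0, 0, 0, 0, 1, 1, 0, 0]

fractions = pay_fraction_by_neuron(neurons, pay)
# Assumed cutoff: treat a neuron as a reservoir neuron if at least 75% of its samples are pay.
reservoir_neurons = sorted(n for n, f in fractions.items() if f >= 0.75)
print(fractions)
print(reservoir_neurons)
```

Neurons that pass the cutoff would then be the candidates to isolate in a 3D viewer; the appropriate threshold depends on the data and the interpreter's judgment.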

No matter where you are in your career, your online “personal brand” has a huge impact on providing opportunity for prospective jobs and garnering the respect and visibility needed for advancement. While geoscientists tackle ambitious projects, publish technical papers, and work hard to advance their careers, the value of these efforts often isn’t realized beyond their immediate professional circle. Learn how to…

As a 20-year veteran of oil and gas marketing and a serial entrepreneur, Laura has deep experience in bringing technology products to market and growing sales pipeline. Armed with a marketing degree from Texas A&M, she began her career doing technical writing for Schlumberger and ExxonMobil in 2001. She started Advertas as a co-founder in 2004 and began to leverage her upstream experience in marketing. In 2006, she co-founded the cyber-security software company, 2FA Technology. After growing 2FA from a startup to 75% market share in target industries, and the subsequent sale of the company, she returned to Advertas to continue working toward the success of her clients, such as Geophysical Insights. Today, she guides strategy for large-scale marketing programs, manages project execution, cultivates relationships with industry media, and advocates for data-driven, account-based marketing practices.

The first stage of the proposed statistical method has proven very useful in the health and social sciences for testing whether there is a relationship between two qualitative variables (nominal or ordinal) or categorical quantitative variables. Its application in the oil industry allows geoscientists not only to test dependence between discrete variables, but also to measure their degree of correlation (weak, moderate, or strong). This article shows its application to reveal the relationship between a SOM classification volume of a set of nine seismic attributes (whose vertical sampling interval is three meters) and different well data (sedimentary facies, Net Reservoir, and effective porosity grouped by ranges). The data were prepared to construct the contingency tables: the dependent (response) and independent (explanatory) variables were defined, the observed frequencies were obtained, the frequencies expected if the variables were independent were calculated, and the difference between the two was then studied using the Chi-Square test statistic. The second stage involves calibrating the SOM volume extracted along the wellbore path through statistical analysis of the petrophysical properties VCL, PHIE, and SW for each neuron, which made it possible to identify the neurons with the best petrophysical values in a carbonate reservoir.
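The contingency-table step can be sketched in a few lines. The example below computes the Chi-Square statistic from observed and expected frequencies and, as one common way to grade the strength of association, Cramér's V. The choice of Cramér's V is an assumption made here for illustration (the abstract does not name the measure it uses), and the counts in the table are invented.

```python
import math

def chi_square_independence(table):
    """Chi-Square statistic, degrees of freedom, and Cramér's V for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Frequency expected if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    smaller_dim = min(len(table) - 1, len(col_totals) - 1)
    dof = (len(table) - 1) * (len(col_totals) - 1)
    cramers_v = math.sqrt(chi2 / (n * smaller_dim))
    return chi2, dof, cramers_v

# Hypothetical counts of well samples.
# Rows: two groups of SOM neurons. Columns: [net reservoir, non-reservoir].
table = [
    [30, 10],
    [10, 30],
]
chi2, dof, v = chi_square_independence(table)
print(f"chi2={chi2:.2f}, dof={dof}, Cramer's V={v:.2f}")
```

With one degree of freedom, the critical Chi-Square value at the 5% significance level is 3.841, so a statistic well above that would lead to rejecting independence. The labels attached to ranges of V (weak, moderate, strong) vary by convention and should be stated alongside any result.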

Heather Bedle received a B.S. (1999) in physics from Wake Forest University, and then worked as a systems engineer in the defense industry. She later received a M.S. (2005) and a Ph. D. (2008) degree from Northwestern University. After graduate school, she joined Chevron and worked as both a development geologist and geophysicist in the Gulf of Mexico before joining Chevron’s Energy Technology Company Unit in Houston, TX. In this position, she worked with the Rock Physics from Seismic team analyzing global assets in Chevron’s portfolio. Dr. Bedle is currently an assistant professor of applied geophysics at the University of Oklahoma’s School of Geosciences. She joined OU in 2018, after instructing at the University of Houston for two years. Dr. Bedle and her student research team at OU primarily work with seismic reflection data, using advanced techniques such as machine learning, attribute analysis, and rock physics to reveal additional structural, stratigraphic and tectonic insights of the subsurface.

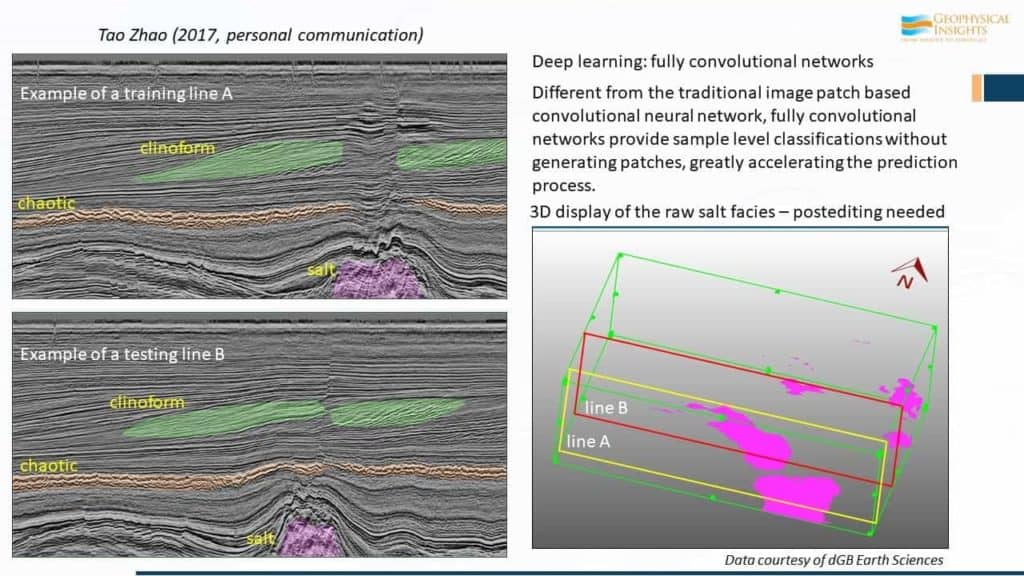

Seismic fault detection is one of the most critical procedures in seismic interpretation. Identifying faults is significant for characterizing and finding potential oil and gas reservoirs. Seismic amplitude data exhibiting good resolution and a high signal-to-noise ratio are key to identifying structural discontinuities using seismic attributes or machine learning techniques, which in turn serve as input for automatic fault extraction. Deep learning Convolutional Neural Networks (CNN) perform well on fault detection without any human-computer interactive work. This study shows an integrated CNN-based fault detection workflow to construct fault images that are sufficiently smooth for subsequent automatic fault extraction. The objectives were to suppress noise or stratigraphic anomalies subparallel to reflector dip and to sharpen faults and other discontinuities that cut reflectors, preconditioning the fault images for subsequent automatic extraction. A 2D continuous wavelet transform-based acquisition footprint suppression method was applied time slice by time slice to suppress the corresponding wavenumber components, so that the CNN fault detection method does not misinterpret acquisition footprint artifacts. To further suppress cross-cutting noise as well as sharpen fault edges, a principal component edge-preserving structure-oriented filter is also applied. The conditioned amplitude volume is then fed to a pre-trained CNN model to compute fault probability. Finally, a Laplacian of Gaussian filter is applied to the original CNN fault probability to enhance fault images. The resulting fault probability volume compares favorably with faults picked by a human interpreter on vertical slices through the seismic amplitude volume.
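The final enhancement step can be illustrated with a small Laplacian of Gaussian (LoG) filter. The sketch below is a simplified 2D, standard-library version applied to a single made-up time slice; the study applies its filter to a fault probability volume, and the kernel size, sigma, edge handling, and function names here are assumptions chosen for illustration.

```python
import math

def log_kernel(sigma=1.0, radius=3):
    """Discrete LoG kernel (up to a positive scale factor), shifted to sum to zero."""
    offsets = range(-radius, radius + 1)
    raw = [[(x * x + y * y - 2 * sigma ** 2) / sigma ** 4
            * math.exp(-(x * x + y * y) / (2 * sigma ** 2))
            for x in offsets] for y in offsets]
    mean = sum(map(sum, raw)) / (len(raw) ** 2)
    return [[v - mean for v in row] for row in raw]

def filter2d(image, kernel):
    """Apply a symmetric kernel to a 2D image, replicating edge values."""
    rows, cols = len(image), len(image[0])
    radius = len(kernel) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for dy in range(-radius, radius + 1):
                rr = min(max(r + dy, 0), rows - 1)
                for dx in range(-radius, radius + 1):
                    cc = min(max(c + dx, 0), cols - 1)
                    acc += kernel[dy + radius][dx + radius] * image[rr][cc]
            out[r][c] = acc
    return out

def enhance_faults(probability, sigma=1.0):
    """Negated LoG response: thin ridges of high probability become positive peaks."""
    response = filter2d(probability, log_kernel(sigma))
    return [[-v for v in row] for row in response]

# Hypothetical 7x7 time slice with one vertical lineament of high fault probability.
time_slice = [[1.0 if c == 3 else 0.0 for c in range(7)] for _ in range(7)]
enhanced = enhance_faults(time_slice)
flat = enhance_faults([[0.5] * 7 for _ in range(7)])  # uniform background
print([round(v, 2) for v in enhanced[3]])
```

Because the kernel sums to zero, a uniform background produces no response, while a narrow lineament stands out against negative side lobes. A production workflow would typically use an optimized library routine and operate in 3D.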

We introduce an integrated machine learning-based fault classification workflow that creates fault component classification volumes that greatly reduce the burden on the human interpreter. We first compute a 3D fault probability volume from pre-conditioned seismic amplitude data using a 3D convolutional neural network (CNN). However, the resulting “fault probability” volume delineates other non-fault edges such as angular unconformities, the base of mass transport complexes, and noise such as acquisition footprint. We find that image processing-based fault discontinuity enhancement and skeletonization methods can enhance the fault discontinuities and suppress many of the non-fault discontinuities. Although each fault is characterized by its dip and azimuth, these two properties are discontinuous at azimuths of φ=±180° and, for near-vertical faults, at azimuths φ and φ+180°, requiring them to be parameterized as four continuous geodetic fault components. These four fault components as well as the fault probability can then be fed into a self-organizing map (SOM) to generate a fault component classification. We find that the final classification result can segment fault sets trending in interpreter-defined orientations and minimize the impact of stratigraphy and noise by selecting different neurons from the SOM 2D neuron color map.
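The two discontinuities mentioned above can be made concrete with a short numerical check. This sketch only demonstrates the problem (why raw dip and azimuth are awkward inputs for a classifier); it does not reproduce the four geodetic components used in the study, whose definitions are not given here. The normal-vector convention and function names are assumptions.

```python
import math

def azimuth_difference(a_deg, b_deg):
    """Smallest angle between two azimuths, accounting for the wrap at +/-180 degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)

def fault_normal(dip_deg, azimuth_deg):
    """Unit normal of a fault plane (assumed convention: z up, azimuth measured in the x-y plane)."""
    dip, az = math.radians(dip_deg), math.radians(azimuth_deg)
    return (math.sin(dip) * math.cos(az), math.sin(dip) * math.sin(az), math.cos(dip))

# Azimuths of +179 and -179 degrees are nearly the same direction,
# but their raw numerical difference is huge.
naive = abs(179.0 - (-179.0))
wrapped = azimuth_difference(179.0, -179.0)

# A vertical fault described with azimuth 30 or 210 degrees is the same plane:
# the two normals point in opposite directions.
n1 = fault_normal(90.0, 30.0)
n2 = fault_normal(90.0, 210.0)
same_plane = all(abs(a + b) < 1e-9 for a, b in zip(n1, n2))
print(naive, wrapped, same_plane)
```

A distance-based classifier such as a SOM would treat the two nearly identical orientations in each case as far apart if fed raw angles, which is the motivation for a continuous parameterization.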

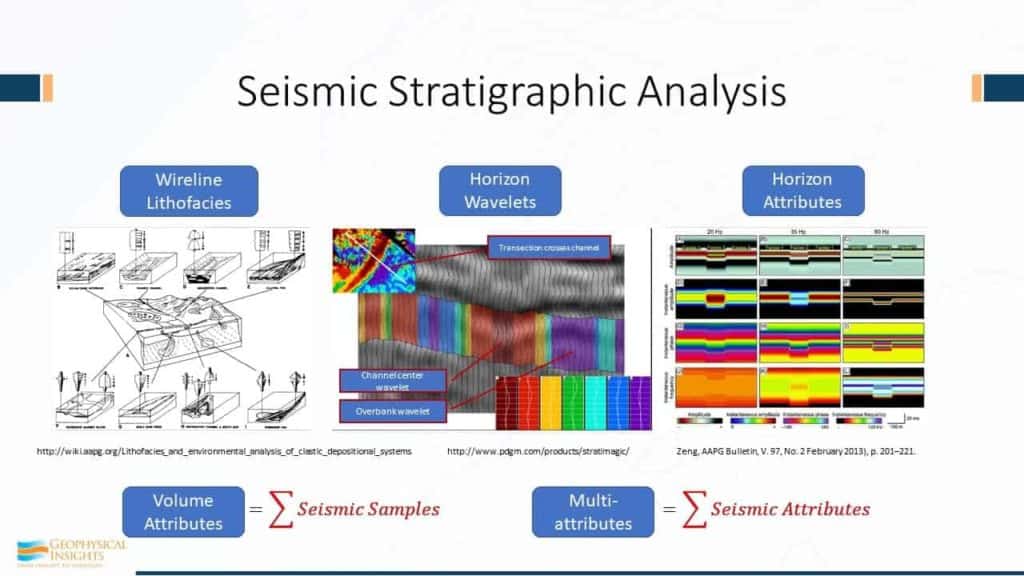

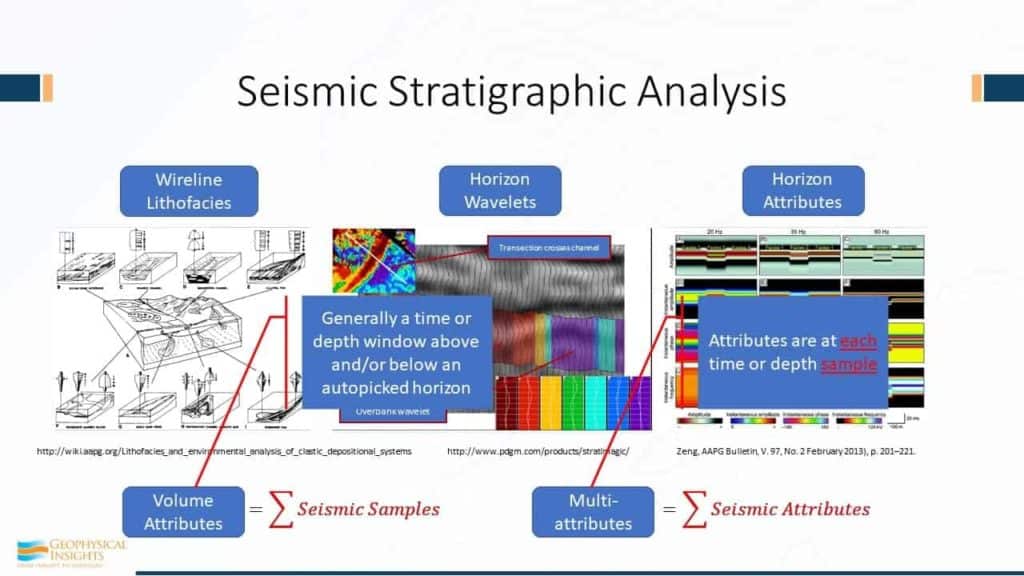

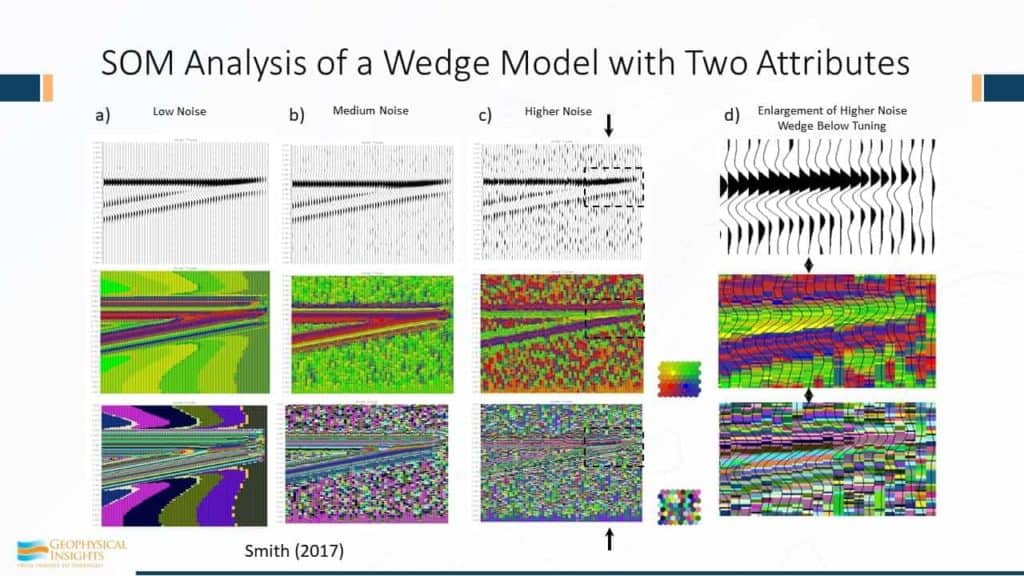

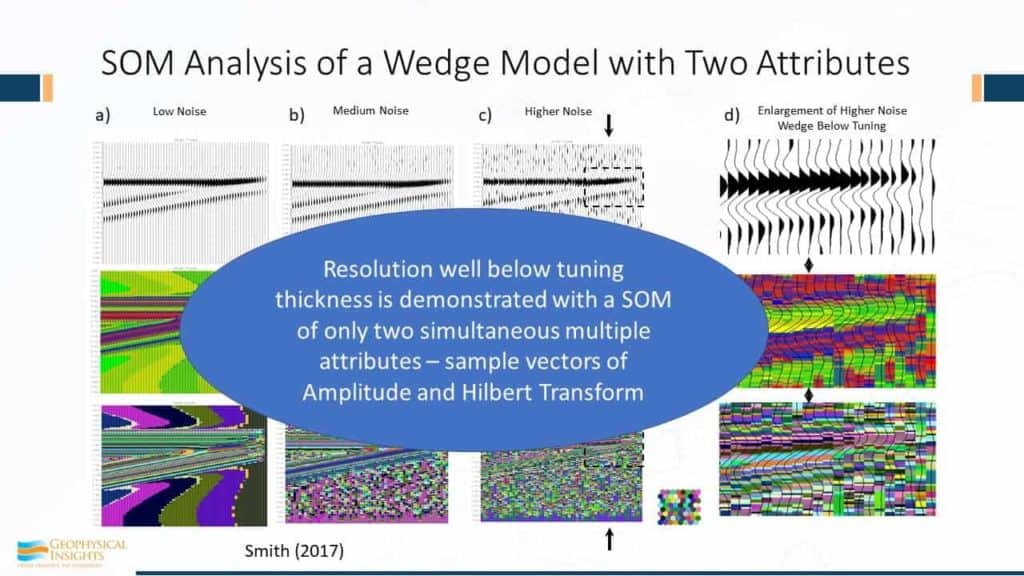

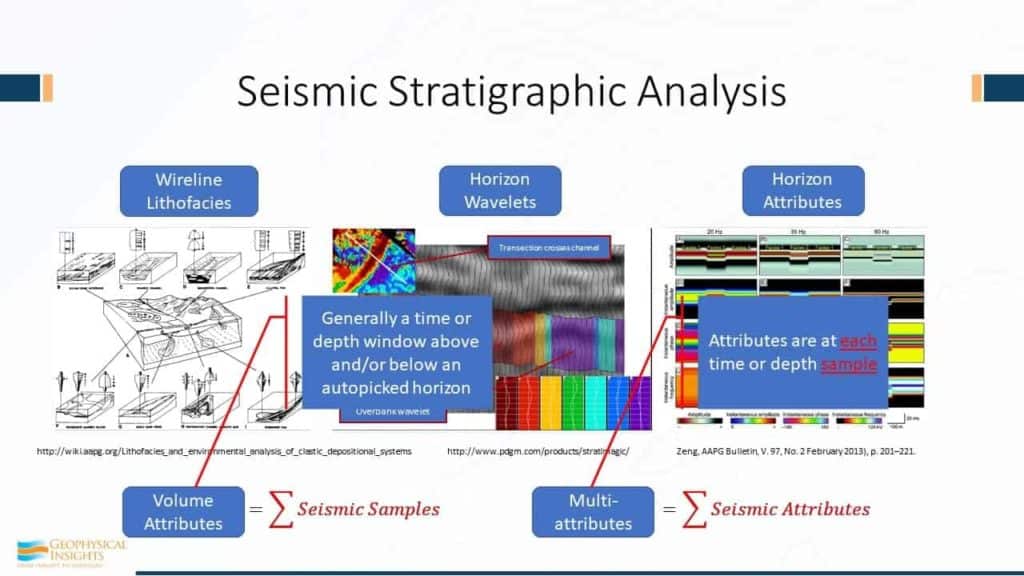

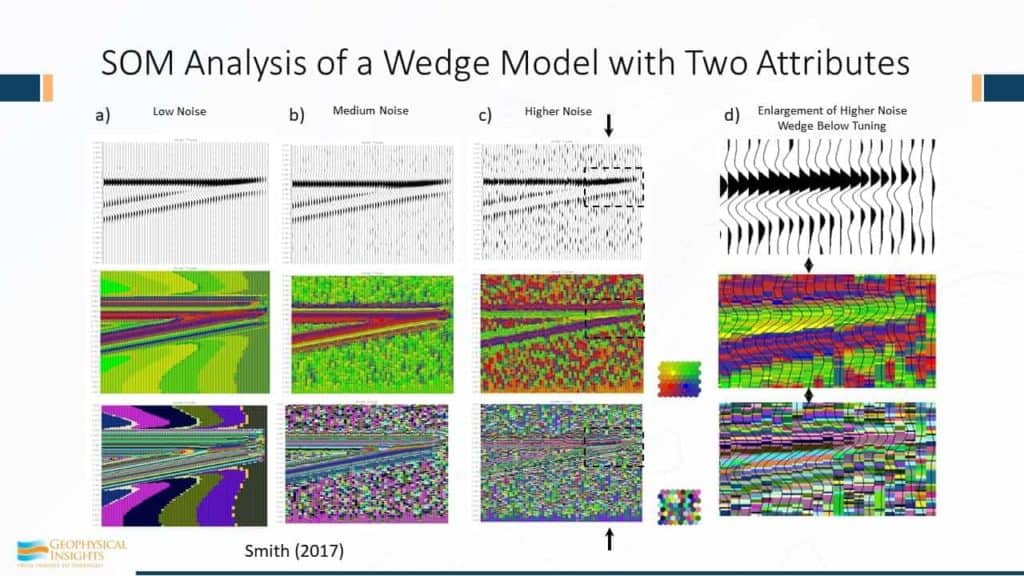

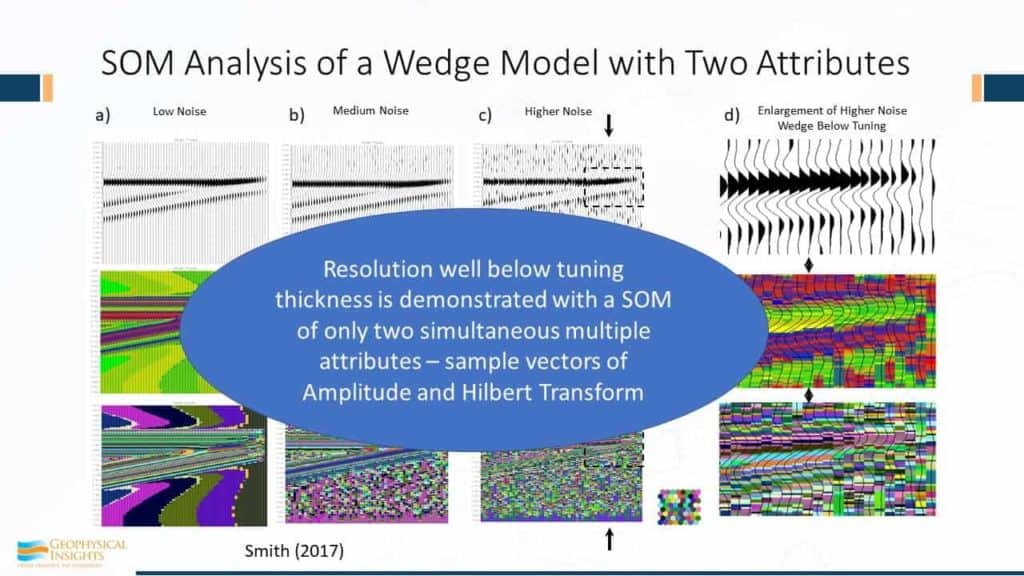

Interpreters rely on seismic pattern changes to identify and map geologic features of importance. The ability to recognize such features depends on the seismic resolution and characteristics of seismic waveforms. With the advancement of machine learning algorithms, new methods for interpreting seismic data are being developed. Among these algorithms, the self-organizing map (SOM) provides a different approach to extracting geological information from a set of seismic attributes.

SOM approximates the input patterns with a finite set of processing neurons arranged in a regular 2D grid of map nodes. In this way, it classifies multi-attribute seismic samples into natural clusters following an unsupervised approach. Because machine learning is unbiased, the classifications can contain both geological information and coherent noise. Thus, seismic interpretation evolves into broader geologic perspectives. Additionally, SOM partitions multi-attribute samples without a priori information (e.g., well data) to guide the process.
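The mechanics described above (a 2D grid of neurons, a winning neuron per sample, no labels) can be sketched with a minimal SOM in pure Python. This is a textbook-style illustration, not the Paradise implementation; the grid size, learning-rate and neighborhood schedules, seed, and two-attribute samples are all assumptions.

```python
import math
import random

def best_matching_unit(weights, sample):
    """Index of the neuron whose weight vector is closest to the sample (the winning neuron)."""
    return min(range(len(weights)),
               key=lambda i: sum((w - s) ** 2 for w, s in zip(weights[i], sample)))

def train_som(samples, rows, cols, epochs=50, lr0=0.5, sigma0=1.0, seed=0):
    """Train a rows x cols SOM; returns one weight vector per grid node."""
    rng = random.Random(seed)
    grid = [(r, c) for r in range(rows) for c in range(cols)]
    weights = [list(rng.choice(samples)) for _ in grid]  # initialize from the data
    order = list(samples)
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                   # learning rate decays over time
        sigma = max(sigma0 * (1.0 - frac), 0.3)   # neighborhood shrinks over time
        rng.shuffle(order)
        for x in order:
            win = grid[best_matching_unit(weights, x)]
            for w, node in zip(weights, grid):
                d2 = (node[0] - win[0]) ** 2 + (node[1] - win[1]) ** 2
                h = math.exp(-d2 / (2.0 * sigma ** 2))  # neighbors move less than the winner
                for k in range(len(w)):
                    w[k] += lr * h * (x[k] - w[k])
    return weights

# Hypothetical two-attribute samples, already scaled to a common range.
samples = [(0.1, 0.2), (0.2, 0.1), (0.0, 0.3), (0.9, 0.8), (1.0, 0.9), (0.8, 1.0)]
weights = train_som(samples, rows=2, cols=2)
classification = [best_matching_unit(weights, s) for s in samples]
print(classification)
```

Each sample is assigned the index of its winning neuron, which is what populates a SOM classification volume. No well data or labels enter the training loop, so any geologic meaning has to be assigned to the neurons afterward.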

The SOM output is a new seismic attribute volume, in which geologic information is captured by classifying samples into winning neurons. Implicit and useful geological information is uncovered through interactive visual inspection of winning neuron classifications. By doing so, interpreters build a classification model that helps them gain insight into complex relationships between attribute patterns and geological features.

Despite all these benefits, there are interpretation challenges regarding whether there is an association between winning neurons and geological features. To address these issues, a bivariate statistical approach is proposed. To evaluate this analysis, three case scenarios are presented. In each case, the association between winning neurons and net reservoir (determined from petrophysical or well log properties) at well locations is analyzed. The results show that the statistical analysis not only aids in the identification of classification patterns but, more importantly, that reservoir/not reservoir classification by classical petrophysical analysis strongly correlates with selected SOM winning neurons. Confidence in interpreted classification features is gained at the borehole, and interpretation is readily extended as geobodies away from the well.

Students at the University of Oklahoma have been exploring the uses of SOM techniques for the last year. This presentation will review learnings and results from a few of these research projects. Two projects have investigated the ability of SOMs to aid in identification of pore space materials – both trying to qualitatively identify gas hydrates and under-saturated gas reservoirs. A third study investigated individual attributes and SOMs in recognizing various carbonate facies in a pinnacle reef in the Michigan Basin. The fourth study took a deep dive of various machine learning algorithms, of which SOMs will be discussed, to understand how much machine learning can aid in the identification of deepwater channel architectures.

Fabian Rada joined Petroleum Oil and Gas Services, Inc (POGS) in January 2015 as Business Development Manager and Consultant to PEMEX. In Mexico, he has participated in several integrated oil and gas reservoir studies. He has consulted with PEMEX Activos and the G&G Technology group to apply the Paradise AI workbench and other tools. Since January 2015, he has been working with Geophysical Insights staff to provide and implement the multi-attribute analysis software Paradise in Petróleos Mexicanos (PEMEX), running a successful pilot test in Litoral Tabasco Tsimin Xux Asset. Mr. Rada began his career in the Venezuelan National Foundation for Seismological Research, where he participated in several geophysical projects, including seismic and gravity data for micro zonation surveys. He then joined China National Petroleum Corporation (CNPC) as QC Geophysicist until he became the Chief Geophysicist in the QA/QC Department. Then, he transitioned to a subsidiary of Petróleos de Venezuela (PDVSA), as a member of the QA/QC and Chief of Potential Field Methods section. Mr. Rada has also participated in processing land seismic data and marine seismic/gravity acquisition surveys. Mr. Rada earned a B.S. in Geophysics from the Central University of Venezuela.

Rapid advances in Machine Learning (ML) are transforming seismic analysis. Using a combination of machine learning (ML) and deep learning applications, geoscientists apply Paradise to extract greater insights from seismic and well data for these and other objectives:

The brief introduction will orient you with the technology and examples of how machine learning is being applied to automate interpretation while generating new insights in the data.

Sarah Stanley joined Geophysical Insights in October, 2017 as a geoscience consultant, and became a full-time employee July 2018. Prior to Geophysical Insights, Sarah was employed by IHS Markit in various leadership positions from 2011 to her retirement in August 2017, including Director US Operations Training and Certification, the Operational Governance Team, and, prior to February 2013, Director of IHS Kingdom Training. Sarah joined SMT in May, 2002, and was the Director of Training for SMT until IHS Markit’s acquisition in 2011.

Prior to joining SMT Sarah was employed by GeoQuest, a subdivision of Schlumberger, from 1998 to 2002. Sarah was also Director of the Geoscience Technology Training Center, North Harris College from 1995 to 1998, and served as a voluntary advisor on geoscience training centers to various geological societies. Sarah has over 37 years of industry experience and has worked as a petroleum geoscientist in various domestic and international plays since August of 1981. Her interpretation experience includes tight gas sands, coalbed methane, international exploration, and unconventional resources.

Sarah holds a Bachelor of Science degree with majors in Biology and General Science and a minor in Earth Science, a Master of Arts in Education, and a Master of Science in Geology from Ball State University, Muncie, Indiana. Sarah is both a Certified Petroleum Geologist and a Registered Geologist with the State of Texas. Sarah holds teaching credentials in both Indiana and Texas.

Sarah is a member of the Houston Geological Society and the American Association of Petroleum Geologists, where she currently serves in the AAPG House of Delegates. Sarah is a recipient of the AAPG Special Award, the AAPG House of Delegates Long Service Award, and the HGS President’s Award for her work in advancing training for petroleum geoscientists. She has served on the AAPG Continuing Education Committee and was Chairman of the AAPG Technical Training Center Committee. Sarah has also served as Secretary of the HGS and served two years as Editor for the AAPG Division of Professional Affairs Correlator.

Dr. Tom Smith received BS and MS degrees in Geology from Iowa State University. His graduate research focused on a shallow refraction investigation of the Manson astrobleme. In 1971, he joined Chevron Geophysical as a processing geophysicist but resigned in 1980 to complete his doctoral studies in 3D modeling and migration at the Seismic Acoustics Lab at the University of Houston. Upon graduation with a Ph.D. in Geophysics in 1981, he started a geophysical consulting practice and taught seminars in seismic interpretation, seismic acquisition, and seismic processing. Dr. Smith founded Seismic Micro-Technology in 1984 to develop PC software to support training workshops, which subsequently led to development of the KINGDOM Software Suite for integrated geoscience interpretation with world-wide success.

The Society of Exploration Geophysicists (SEG) recognized Dr. Smith’s work with the SEG Enterprise Award in 2000, and in 2010, the Geophysical Society of Houston (GSH) awarded him an Honorary Membership. Iowa State University (ISU) has recognized Dr. Smith throughout his career with the Distinguished Alumnus Lecturer Award in 1996, the Citation of Merit for National and International Recognition in 2002, and the highest alumni honor in 2015, the Distinguished Alumni Award. The University of Houston College of Natural Sciences and Mathematics recognized Dr. Smith with the 2017 Distinguished Alumni Award.

In 2009, Dr. Smith founded Geophysical Insights, where he leads a team of geophysicists, geologists and computer scientists in developing advanced technologies for fundamental geophysical problems. The company launched the Paradise® multi-attribute analysis software in 2013, which uses Machine Learning and pattern recognition to extract greater information from seismic data.

Dr. Smith has been a member of the SEG since 1967 and is a professional member of SEG, GSH, HGS, EAGE, SIPES, AAPG, Sigma XI, SSA and AGU. Dr. Smith served as Chairman of the SEG Foundation from 2010 to 2013. On January 25, 2016, he was recognized by the Houston Geological Society (HGS) as a geophysicist who has made significant contributions to the field of geology. He currently serves on the SEG President-Elect’s Strategy and Planning Committee and the ISU Foundation Campaign Committee for Forever True, For Iowa State.

This study will demonstrate an automated machine learning approach for fault detection in a 3D seismic volume. The workflow combines Deep Learning Convolutional Neural Networks (CNN) with a conventional data pre-processing step and an image processing-based post-processing approach to produce high-quality fault attribute volumes of fault probability, fault dip magnitude, and fault dip azimuth. These volumes are then combined with instantaneous attributes in an unsupervised machine learning classification, allowing the isolation of both structural and stratigraphic features into a single 3D volume. The workflow is illustrated on a 3D seismic volume from the Denver-Julesburg Basin, and a statistical analysis is used to calibrate results to well data.

Iván Dimitri Marroquín is a 20-year veteran of data science research, consistently publishing in peer-reviewed journals and speaking at international conference meetings. Dr. Marroquín received a Ph.D. in geophysics from McGill University, where he conducted and participated in 3D seismic research projects. These projects focused on the development of interpretation techniques based on seismic attributes and seismic trace shape information to identify significant geological features or reservoir physical properties. Examples of his research work are attribute-based modeling to predict coalbed thickness and permeability zones, combining spectral analysis with coherency imagery technique to enhance interpretation of subtle geologic features, and implementing a visual-based data mining technique on clustering to match seismic trace shape variability to changes in reservoir properties.

Dr. Marroquín has also conducted some ground-breaking research on seismic facies classification and volume visualization. This led to his development of a visual-based framework that determines the optimal number of seismic facies to best reveal meaningful geologic trends in the seismic data. He proposed seismic facies classification as an alternative to data integration analysis to capture geologic information in the form of seismic facies groups. He has investigated the usefulness of mobile devices to locate, isolate, and understand the spatial relationships of important geologic features in a context-rich 3D environment. In this work, he demonstrated that mobile devices are capable of performing seismic volume visualization, facilitating the interpretation of imaged geologic features. He has definitively shown that mobile devices eventually will allow the visual examination of seismic data anywhere and at any time.

In 2016, Dr. Marroquín joined Geophysical Insights as a senior researcher, where his efforts have been focused on developing machine learning solutions for the oil and gas industry. For his first project, he developed a novel procedure for lithofacies classification that combines a neural network with automated machine methods. In parallel, he implemented a machine learning pipeline to derive cluster centers from a trained neural network. The next step in the project is to correlate lithofacies classification to the outcome of seismic facies analysis. Other research interests include the application of diverse machine learning technologies for analyzing and discerning trends and patterns in data related to oil and gas industry.

Dr. Jie Qi is a Research Geophysicist at Geophysical Insights, where he works closely with product development and geoscience consultants. His research interests include machine learning-based fault detection, seismic interpretation, pattern recognition, image processing, seismic attribute development and interpretation, and seismic facies analysis. Dr. Qi received a BS (2011) in Geoscience from the China University of Petroleum in Beijing, and an MS (2013) in Geophysics from the University of Houston. He earned a Ph.D. (2017) in Geophysics from the University of Oklahoma, Norman. His experience includes work as a Research Assistant at the University of Houston (2011-2013) and the University of Oklahoma (2013-2017). Dr. Qi was with Petroleum Geo-Services (PGS), Inc. in 2014 as a summer intern, where he worked on a semi-supervised seismic facies analysis. He served as a postdoctoral Research Associate in the Attribute-Assisted Seismic Processing and Interpretation (AASPI) consortium at the University of Oklahoma from 2017 to 2020.

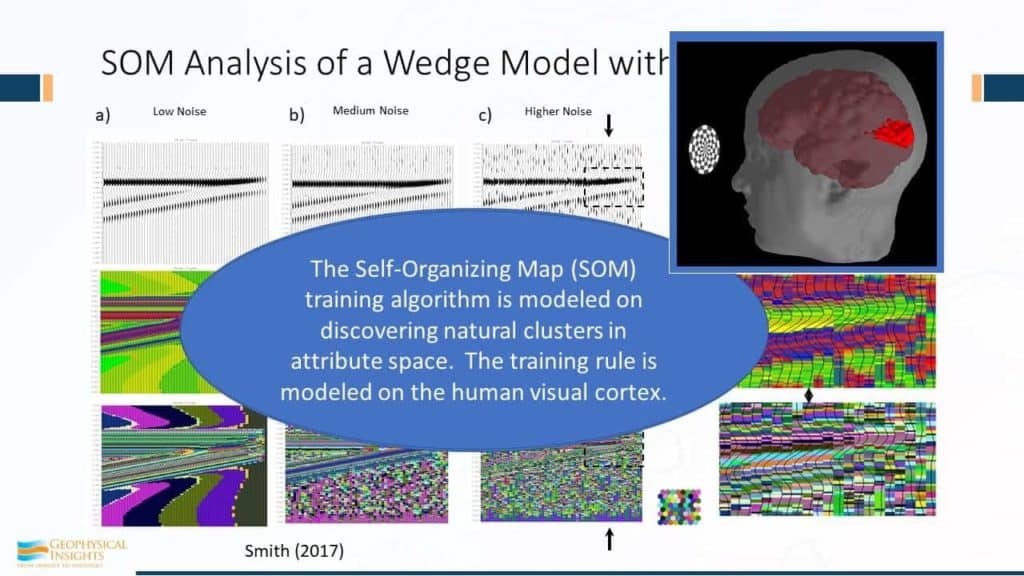

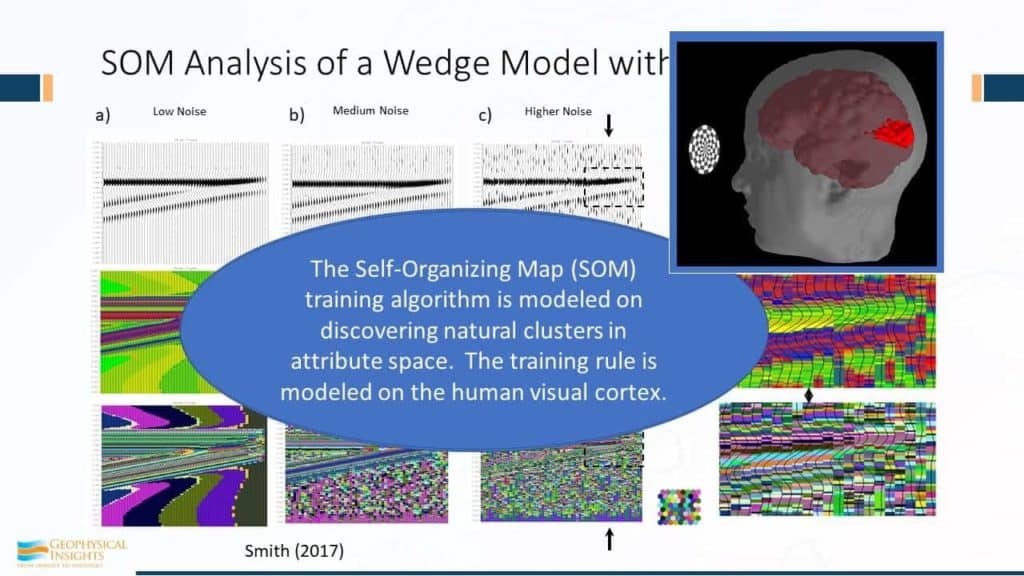

Self-organization is the nonlinear formation of spatial and temporal structures, patterns or functions in complex systems (Aschwanden et al., 2018). Simple examples of self-organization include flocks of birds, schools of fish, crystal development, formation of snowflakes, and fractals. What these examples have in common is the appearance of structure or patterns without centralized control. Self-organizing systems are typically governed by power laws, such as the Gutenberg-Richter law of earthquake frequency and magnitude. In addition, the time frames of such systems display a characteristic self-similar (fractal) response, where earthquakes or avalanches, for example, occur over all possible time scales (Baas, 2002).

The existence of nonlinear dynamic systems and ordered structures in the earth is well known and has been studied for centuries. These structures can appear as sedimentary features, layered and folded structures, stratigraphic formations, diapirs, eolian dune systems, channelized fluvial and deltaic systems, and many more (Budd et al., 2014; Dietrich and Jacob, 2018). Each of these geologic processes and features exhibits patterns through the action of undirected local dynamics, which is generally termed “self-organization” (Paola, 2014).

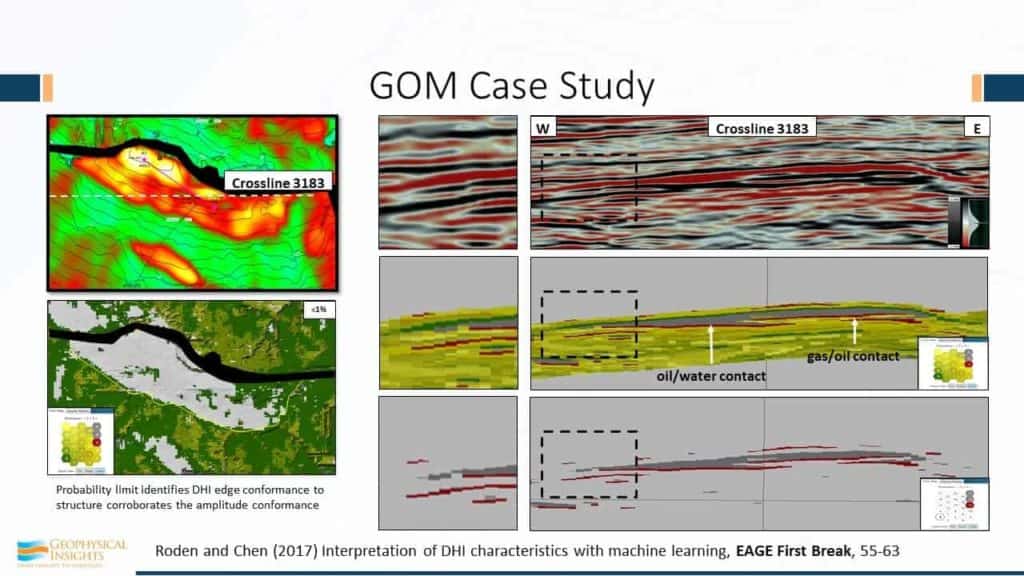

Artificial intelligence, and specifically neural networks, exhibit and reveal self-organization characteristics. The interest in applying neural networks stems from the fact that they are universal approximators for various kinds of nonlinear dynamical systems of arbitrary complexity (Pessa, 2008). A special class of artificial neural networks is aptly named the self-organizing map (SOM) (Kohonen, 1982). It has been found that SOM can identify significant organizational structure in the form of clusters from seismic attributes that relate to geologic features (Strecker and Uden, 2002; Coleou et al., 2003; de Matos, 2006; Roy et al., 2013; Roden et al., 2015; Zhao et al., 2016; Roden et al., 2017; Zhao et al., 2017; Roden and Chen, 2017; Sacrey and Roden, 2018; Leal et al., 2019; Hussein et al., 2020; Hardage et al., 2020; Manauchehri et al., 2020). As a consequence, SOM is an excellent machine learning neural network approach that uses seismic attributes to help identify self-organization features and define natural geologic patterns that are not easily seen, or not seen at all, in the data.
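
To make the clustering idea concrete, here is a minimal sketch of a self-organizing map written in plain Python. It is an illustration only and is not the implementation used in Paradise or in the cited studies. The function names (`train_som`, `best_matching_unit`), the 2 x 2 grid, the linear decay schedule, and the two-attribute samples are all assumptions chosen for brevity.

```python
import math
import random


def best_matching_unit(weights, sample):
    """Return the grid position of the neuron whose weights are closest to the sample."""
    return min(
        weights,
        key=lambda node: sum((w - x) ** 2 for w, x in zip(weights[node], sample)),
    )


def train_som(samples, rows=2, cols=2, epochs=50, lr0=0.5, sigma0=1.0, seed=0):
    """Train a small SOM and return {(row, col): weight_vector}."""
    rng = random.Random(seed)
    # Start every neuron at a randomly chosen training sample.
    weights = {
        (r, c): list(rng.choice(samples)) for r in range(rows) for c in range(cols)
    }
    order = list(range(len(samples)))
    for epoch in range(epochs):
        # Learning rate and neighborhood radius both shrink as training proceeds.
        decay = 1.0 - epoch / epochs
        lr = lr0 * decay
        sigma = max(sigma0 * decay, 0.01)
        rng.shuffle(order)
        for i in order:
            sample = samples[i]
            bmu = best_matching_unit(weights, sample)
            for node, w in weights.items():
                # Neurons near the winner on the grid are pulled along with it.
                grid_dist2 = (node[0] - bmu[0]) ** 2 + (node[1] - bmu[1]) ** 2
                influence = math.exp(-grid_dist2 / (2.0 * sigma * sigma))
                for k in range(len(w)):
                    w[k] += lr * influence * (sample[k] - w[k])
    return weights


# Hypothetical two-attribute samples forming two well-separated groups.
group_a = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1)]
group_b = [(10.0, 10.0), (10.1, 10.0), (10.0, 10.1), (10.1, 10.1)]
som = train_som(group_a + group_b)
neurons_a = {best_matching_unit(som, s) for s in group_a}
neurons_b = {best_matching_unit(som, s) for s in group_b}
print("neurons winning group A:", sorted(neurons_a))
print("neurons winning group B:", sorted(neurons_b))
```

No labels are supplied at any point. The separation of the two groups onto different neurons emerges from local updates alone, which is the sense in which the map is self-organizing. In practice each sample would be a vector of several seismic attributes rather than the two toy values used here.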

Rocky R. Roden started his own consulting company, Rocky Ridge Resources Inc. in 2003 and works with several oil companies on technical and prospect evaluation issues. He is also a principal in the Rose and Associates DHI Risk Analysis Consortium and was Chief Consulting Geophysicist with Seismic Micro-technology. Rocky is a proven oil finder with 37 years in the industry, gaining extensive knowledge of modern geoscience technical approaches.

Rocky holds a BS in Oceanographic Technology-Geology from Lamar University and an MS in Geological and Geophysical Oceanography from Texas A&M University. As Chief Geophysicist and Director of Applied Technology for Repsol-YPF, his role comprised advising corporate officers, geoscientists, and managers on interpretation, strategy and technical analysis for exploration and development in offices in the U.S., Argentina, Spain, Egypt, Bolivia, Ecuador, Peru, Brazil, Venezuela, Malaysia, and Indonesia. He has been involved in the technical and economic evaluation of Gulf of Mexico lease sales, farmouts worldwide, and bid rounds in South America, Europe, and the Far East. Previous work experience includes exploration and development at Maxus Energy, Pogo Producing, Decca Survey, and Texaco. Rocky is a member of SEG, AAPG, HGS, GSH, EAGE, and SIPES; he is also a past Chairman of The Leading Edge Editorial Board.

Bob A. Hardage received a PhD in physics from Oklahoma State University. His thesis work focused on high-velocity micro-meteoroid impact on space vehicles, which required trips to Goddard Space Flight Center to do finite-difference modeling on dedicated computers. Upon completing his university studies, he worked at Phillips Petroleum Company for 23 years and was Exploration Manager for Asia and Latin America when he left Phillips. He moved to WesternAtlas and worked 3 years as Vice President of Geophysical Development and Marketing. He then established a multicomponent seismic research laboratory at the Bureau of Economic Geology and served The University of Texas at Austin as a Senior Research Scientist for 28 years. He has published books on VSP, cross-well profiling, seismic stratigraphy, and multicomponent seismic technology. He was the first person to serve 6 years on the Board of Directors of the Society of Exploration Geophysicists (SEG). His Board service was as SEG Editor (2 years), followed by 1-year terms as First VP, President Elect, President, and Past President. SEG has awarded him a Special Commendation, Life Membership, and Honorary Membership. He wrote the AAPG Explorer column on geophysics for 6 years. AAPG honored him with a Distinguished Service award for promoting geophysics among the geological community.

Examination of vertical seismic profile (VSP) data with unsupervised machine learning technology is a rigorous way to compare the fabric of down-going, illuminating P and S wavefields with the fabric of up-going reflections and interbed multiples created by these wavefields. This concept is introduced in this paper by applying unsupervised learning to VSP data to better understand the physics of P and S reflection seismology. The zero-offset VSP data used in this investigation were acquired in a hard-rock, fast-velocity environment that caused the shallowest 2 or 3 geophones to be inside the near-field radiation zone of a vertical-vibrator baseplate. This study shows how to use instantaneous attributes to backtrack down-going direct-P and direct-S illuminating wavelets to the vibrator baseplate inside the near-field zone. This backtracking confirms that the points-of-origin of direct-P and direct-S are identical. The investigation then applies principal component analysis (PCA) to VSP data and shows that direct-S and direct-P wavefields that are created simultaneously at a vertical-vibrator baseplate have the same dominant principal components. A self-organizing map (SOM) approach is then taken to illustrate how unsupervised machine learning describes the fabric of down-going and up-going events embedded in vertical-geophone VSP data. These SOM results show that a small number of specific neurons build the down-going direct-P illuminating wavefield, and another small group of neurons builds up-going P primary reflections and early-arriving down-going P multiples. The internal attribute fabric of these key down-going and up-going neurons is then compared to expose their similarities and differences. This initial study indicates that unsupervised machine learning, when applied to VSP data, is a powerful tool for understanding the physics of seismic reflectivity at a prospect.
This research strategy of analyzing VSP data with unsupervised machine learning will now expand to horizontal-geophone VSP data.
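
One way to picture the PCA comparison described above is to compute the dominant principal component of each wavefield's attribute samples and measure how closely the two directions align. The sketch below is a hedged illustration in plain Python, not the procedure used in the paper. The helper names (`covariance_matrix`, `dominant_component`, `alignment`), the use of power iteration, and the two-attribute `direct_p` and `direct_s` samples are all assumptions made for the example.

```python
import math


def covariance_matrix(rows):
    """Sample covariance matrix of a list of equal-length attribute vectors."""
    n, dim = len(rows), len(rows[0])
    means = [sum(r[k] for r in rows) / n for k in range(dim)]
    centered = [[r[k] - means[k] for k in range(dim)] for r in rows]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(dim)]
        for i in range(dim)
    ]


def dominant_component(rows, iterations=200):
    """Approximate the first principal component by power iteration."""
    cov = covariance_matrix(rows)
    dim = len(cov)
    v = [1.0 / math.sqrt(dim)] * dim  # arbitrary unit-length starting vector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # degenerate data with no variance
        v = [x / norm for x in w]
    return v


def alignment(u, v):
    """Absolute cosine between two unit vectors (1.0 means the same direction)."""
    return abs(sum(a * b for a, b in zip(u, v)))


# Hypothetical two-attribute samples for two wavefields that share one trend.
direct_p = [(t, 2.0 * t + 0.05 * (-1) ** t) for t in range(10)]
direct_s = [(5.0 + 0.5 * t, 1.0 + t - 0.05 * (-1) ** t) for t in range(10)]

pc_p = dominant_component(direct_p)
pc_s = dominant_component(direct_s)
print("alignment of dominant components:", alignment(pc_p, pc_s))
```

The absolute value in `alignment` is deliberate, because the sign of a principal component is arbitrary. Power iteration was chosen only to keep the sketch free of external libraries; a numerical library's eigendecomposition would be the usual choice for real attribute volumes.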

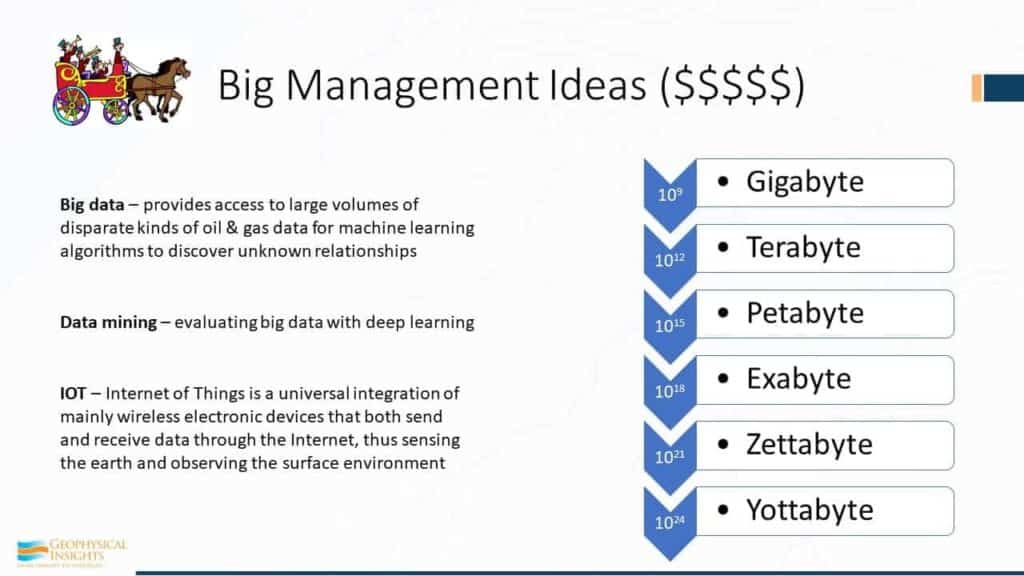

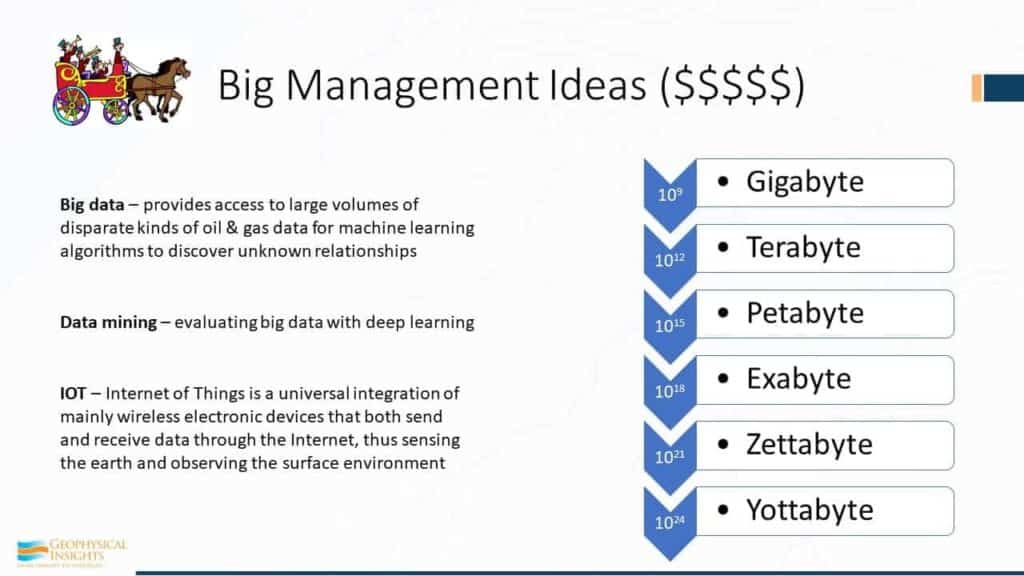

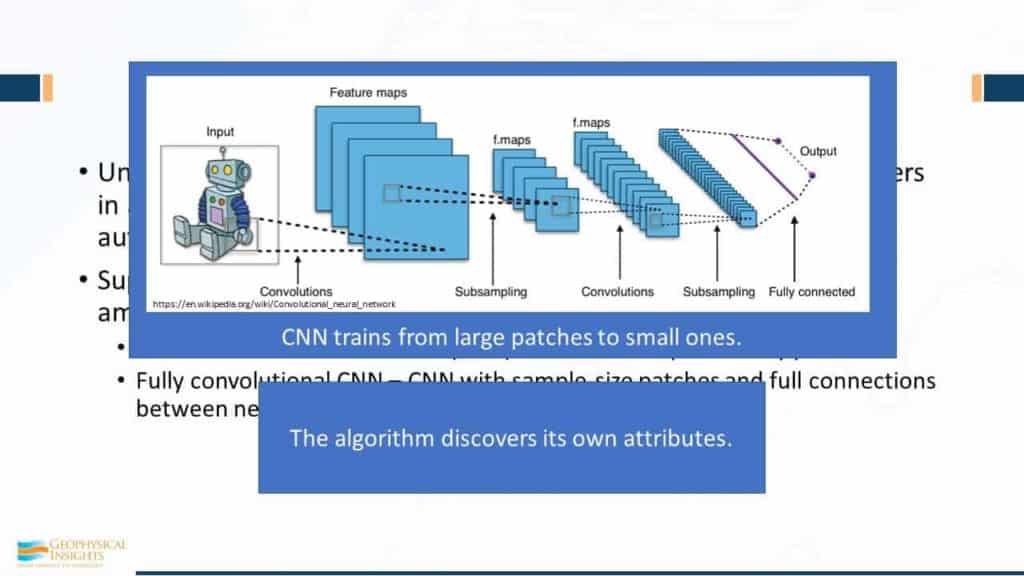

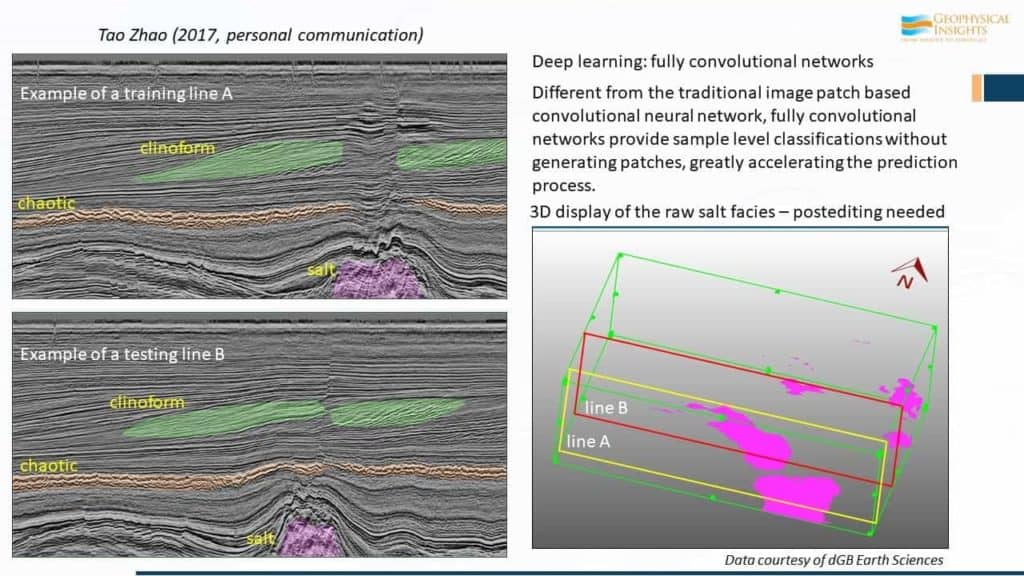

This presentation covers big-picture machine learning buzz words with humor and unassailable frankness. The goal of the material is for every geoscientist to gain confidence in these important concepts and how they add to our well-established practices, particularly seismic interpretation. Presentation topics include a machine learning historical perspective, what makes it different, a fish factory, Shazam, comparison of supervised and unsupervised machine learning methods with examples, tuning thickness, deep learning, hard/soft attribute spaces, multi-attribute samples, and several interpretation examples. After the presentation, you may not know how to run machine learning algorithms, but you should be able to appreciate their value and avoid some of their limitations.

Deborah is a geologist/geophysicist with 44 years of oil and gas exploration experience in Texas, Louisiana Gulf Coast and Mid-Continent areas of the US. She received her degree in Geology from the University of Oklahoma in 1976 and immediately started working for Gulf Oil in their Oklahoma City offices.

She started her own company, Auburn Energy, in 1990 and built her first geophysical workstation using Kingdom software in 1996. She helped SMT/IHS for 18 years in developing and testing the Kingdom Software. She specializes in 2D and 3D interpretation for clients in the US and internationally. For the past nine years she has been part of a team to study and bring the power of multi-attribute neural analysis of seismic data to the geoscience public, guided by Dr. Tom Smith, founder of SMT. She has become an expert in the use of Paradise software and has seven discoveries for clients using multi-attribute neural analysis.

Deborah has been very active in the geological community. She is past national President of SIPES (Society of Independent Professional Earth Scientists), past President of the Division of Professional Affairs of AAPG (American Association of Petroleum Geologists), Past Treasurer of AAPG and Past President of the Houston Geological Society. She is also Past President of the Gulf Coast Association of Geological Societies and just ended a term as one of the GCAGS representatives on the AAPG Advisory Council. Deborah is also a DPA Certified Petroleum Geologist #4014 and DPA Certified Petroleum Geophysicist #2. She belongs to AAPG, SIPES, Houston Geological Society, South Texas Geological Society and the Oklahoma City Geological Society (OCGS).

Michael A. Dunn is an exploration executive with extensive global experience including the Gulf of Mexico, Central America, Australia, China and North Africa. Mr. Dunn has a proven track record of successfully executing exploration strategies built on a foundation of new and innovative technologies. Currently, Michael serves as Senior Vice President of Business Development for Geophysical Insights.

He joined Shell in 1979 as an exploration geophysicist and party chief and held increasing levels of responsibility including Manager of Interpretation Research. In 1997, he participated in the launch of Geokinetics, which completed an IPO on the AMEX in 2007. His extensive experience with oil companies (Shell and Woodside) and the service sector (Geokinetics and Halliburton) has provided him with a unique perspective on technology and applications in oil and gas. Michael received a B.S. in Geology from Rutgers University and an M.S. in Geophysics from the University of Chicago.

Hal H. Green is a marketing executive and entrepreneur in the energy industry with more than 25 years of experience in starting and managing technology companies. He holds a B.S. in Electrical Engineering from Texas A&M University and an MBA from the University of Houston. He has invested his career at the intersection of marketing and technology, with a focus on business strategy, marketing, and effective selling practices. Mr. Green has a diverse portfolio of experience in marketing technology to the hydrocarbon supply chain – from upstream exploration through downstream refining & petrochemical. Throughout his career, Mr. Green has been a proven thought-leader and entrepreneur, while supporting several tech start-ups.

He started his career as a process engineer in the semiconductor manufacturing industry in Dallas, Texas and later launched an engineering consulting and systems integration business. Following the sale of that business in the late 80’s, he joined Setpoint in Houston, Texas where he eventually led that company’s Manufacturing Systems business. Aspen Technology acquired Setpoint in January 1996 and Mr. Green continued as Director of Business Development for the Information Management and Polymer Business Units.

In 2004, Mr. Green founded Advertas, a full-service marketing and public relations firm serving clients in energy and technology. In 2010, Geophysical Insights retained Advertas as their marketing firm. Dr. Tom Smith, President/CEO of Geophysical Insights, soon appointed Mr. Green as Director of Marketing and Business Development for Geophysical Insights, in which capacity he still serves today.

Hana Kabazi joined Geophysical Insights in October of 201, and is now one of our Product Managers for Paradise. Mrs. Kabazi has over 7 years of oil and gas experience, including 5 years at Halliburton – Landmark. During her time at Landmark she held positions as a consultant to many E&P companies, technical advisor to the QA organization, and product manager of Subsurface Mapping in DecisionSpace. Mrs. Kabazi has a B.S. in Geology from the University of Texas at Austin, and an M.S. in Geology from the University of Houston.

Carolan (Carrie) Laudon holds a PhD in geophysics from the University of Minnesota and a BS in geology from the University of Wisconsin Eau Claire. She has been Senior Geophysical Consultant with Geophysical Insights since 2017 working with Paradise®, their machine learning platform. Prior roles include Vice President of Consulting Services and Microseismic Technology for Global Geophysical Services and 17 years with Schlumberger in technical, management and sales, starting in Alaska and including Aberdeen, Scotland, Houston, TX, Denver, CO and Reading, England. She spent five years early in her career with ARCO Alaska as a seismic interpreter for the Central North Slope exploration team.