Deborah Sacrey, owner of Auburn Energy, presented at the AAPG Deep Learning TIG for a free lunch and learn on Wednesday, 5 May 2021 at 12–1 p.m. (CT).

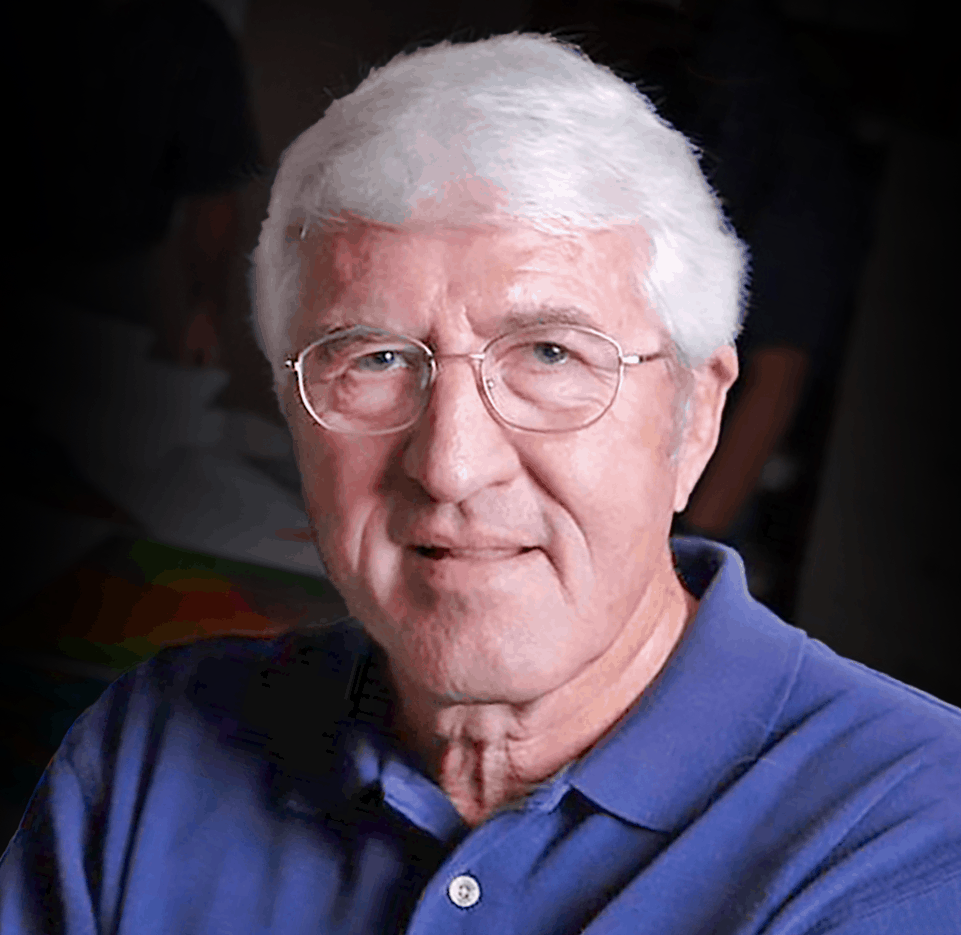

Deborah Sacrey

Owner – Auburn Energy

Deborah is a geologist/geophysicist with 44 years of oil and gas exploration experience in Texas, Louisiana Gulf Coast and Mid-Continent areas of the US. She received her degree in Geology from the University of Oklahoma in 1976 and immediately started working for Gulf Oil in their Oklahoma City offices.

She started her own company, Auburn Energy, in 1990 and built her first geophysical workstation using Kingdom software in 1996. She helped SMT/IHS develop and test the Kingdom software for 18 years. She specializes in 2D and 3D interpretation for clients in the US and internationally. For the past nine years she has been part of a team, guided by Dr. Tom Smith, founder of SMT, that studies multi-attribute neural analysis of seismic data and brings its power to the geoscience public. She has become an expert in the use of Paradise software and has made seven discoveries for clients using multi-attribute neural analysis.

Deborah has been very active in the geological community. She is a past national President of SIPES (Society of Independent Professional Earth Scientists), past President of the Division of Professional Affairs of AAPG (American Association of Petroleum Geologists), past Treasurer of AAPG and past President of the Houston Geological Society. She is also a past President of the Gulf Coast Association of Geological Societies (GCAGS) and just ended a term as one of the GCAGS representatives on the AAPG Advisory Council. Deborah is also a DPA Certified Petroleum Geologist (#4014) and DPA Certified Petroleum Geophysicist (#2). She belongs to AAPG, SIPES, the Houston Geological Society, the South Texas Geological Society and the Oklahoma City Geological Society (OCGS).

Transcript

Susan Nash:

Hello everyone. I’m Susan Nash, AAPG. I’m thrilled to be here today for the very first lunch and learn of our Deep Learning Technical Interest Group, and the topic today is machine learning: new discoveries in reservoir optimization. Deborah Sacrey of Auburn Energy is here; she has a more specific title for the paper, but it’s really exciting: new discoveries and new possibilities. So I’d like to turn the floor over to Patrick Ing, who will tell us a little bit about the technical interest group and introduce our speaker.

Patrick Ing:

Thank you, Susan. Welcome, everyone, to this [crosstalk] or supper, depending on where you are. The Deep Learning group in AAPG is a technical interest group; I’ll give you the link so you can contribute and keep the conversation going after this. Over the last 12 months at least, we’ve seen an emerging pattern of using ensemble techniques like random forest, or other techniques like support vector machines, to automate lithology identification, and on the seismic side, we have seen deep convolutional neural networks used for map interpretation.

Now, the challenge that remains is working across scales, mixing seismic and [inaudible] resolution. So today, we’re in for a treat. Our speaker, Deborah Sacrey, is an experienced geologist and geophysicist; you can think of her as both the left and the right brain. In addition, she has hands-on experience with the software as well as actual field project experience. So today she’s going to dish up [inaudible] artificial nuance and show us how we can use it, perhaps to uncover overlooked or new opportunities. So without further ado, I will turn control over to Deborah.

Susan Nash:

Welcome, Deborah. And just quickly, everyone, put your questions in the chat box and we’ll answer them at the end. Welcome, Deborah.

Deborah Sacrey:

Thank you, Susan. I’m sharing my screen now. Can you see it okay?

Patrick Ing:

Yes.

Deborah Sacrey:

Okay, and I’ll probably go dark on my camera so I won’t be a distraction to myself. Good day, everyone. I would say morning, afternoon and evening, because we’ve got people from all over the place. What I’d like to discuss today is some work I’ve done: case histories on two different reservoir types, one unconventional and one conventional, showing how machine learning can help define sweet spots, help you calculate reserves, and a lot of other technical things that are a lot of fun.

I’m going to start out with... let me get this going. Okay. I’m using some software called Paradise. Paradise comes from a company that Dr. Tom Smith started back in 2008 after he sold Kingdom. He still wasn’t ready to retire, so he started playing around with neural networks, along with Terry Turner, applying neural network classification, using self-organizing maps (SOM), to multiple seismic attributes. So what we’re doing is looking at multiple seismic attributes at one time and looking at the variations in those attributes, because they all contain information.

The premise behind Paradise is that, instead of wavelet-based processing or classification, like Stratimagic used to do, we’re looking at everything on a sample basis, using sample statistics. If you have a wavelet that’s 20 or 30 milliseconds long and a one-millisecond sample rate, then you’re parsing that data and looking at it statistically 20 or 30 times more than you probably would when you’re looking at a wavelet and mapping zero crossings, peaks or troughs. This generates a lot of information, but it also lets you see a lot of fine detail in the subsurface. One reason we use multiple attributes, like I showed earlier, is that at each sample, each attribute carries slightly different information. We put all of this in a cloud and have the neurons look for the natural clusters of data in that cloud, and because each data point has an X, Y and Z, when it comes out of the cloud as part of a cluster, it puts itself back in a volume that’s very easy to interpret.
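To make the sample-based idea concrete, here is a minimal self-organizing map in NumPy. It is a sketch under stated assumptions, not Paradise’s implementation: the random “attribute volumes”, the 4 x 4 map size, and the linear learning-rate and neighborhood schedules are all hypothetical choices. It shows the workflow described above: every sample, not every wavelet, becomes one point in a multi-attribute cloud; the neurons settle on clusters in that cloud; and because each point keeps its position, the winning-neuron numbers drop straight back into a volume. Neurons here are numbered from 0, whereas the talk refers to neurons such as 71 and 72.

```python
import numpy as np

def train_som(samples, rows=8, cols=8, n_iter=2000, lr0=0.5, sigma0=None, seed=0):
    """Train a rectangular self-organizing map on an (n_samples, n_attributes) array."""
    rng = np.random.default_rng(seed)
    n, _ = samples.shape
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2.0
    # Initialize each neuron's weight vector with a randomly chosen sample.
    weights = samples[rng.integers(0, n, rows * cols)].astype(float)
    # (row, col) position of every neuron on the 2D map, used by the neighborhood.
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)              # learning rate decays linearly
        sigma = sigma0 * (1.0 - frac) + 0.5  # neighborhood radius shrinks over time
        x = samples[rng.integers(0, n)]
        bmu = np.argmin(((weights - x) ** 2).sum(axis=1))  # best-matching unit
        d2 = ((grid - grid[bmu]) ** 2).sum(axis=1)
        h = np.exp(-d2 / (2.0 * sigma ** 2))  # Gaussian neighborhood on the map
        weights += lr * h[:, None] * (x - weights)
    return weights

def classify(samples, weights):
    """Winning-neuron index (0-based) for every sample; fine for small arrays."""
    d2 = ((samples[:, None, :] - weights[None, :, :]) ** 2).sum(axis=2)
    return np.argmin(d2, axis=1)

# Hypothetical stand-ins for attribute volumes shaped (inlines, crosslines, samples).
rng = np.random.default_rng(1)
shape = (10, 12, 50)
attrs = [rng.normal(size=shape) for _ in range(4)]

# Each sample becomes one point in attribute space; standardize each attribute.
X = np.stack([a.ravel() for a in attrs], axis=1)
X = (X - X.mean(axis=0)) / X.std(axis=0)

w = train_som(X, rows=4, cols=4, n_iter=500)
# ravel() and reshape() use the same order, so each voxel gets back its own cluster.
neuron_volume = classify(X, w).reshape(shape)
```

Keeping the sample-to-voxel mapping through a plain ravel/reshape is what lets a cluster number be displayed as a volume without any interpolation.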

So what you end up seeing is voxels, and I call them chiclets because they remind me of the candy gum we used to eat. The voxel size is based on the sample interval you’re using and the bin size of the data. Now, 99% of my work is in 3D, but this process also works on 2D data if you’re in remote areas where you don’t have a lot of 3D information. In conventional work with the wavelet, we have what we call tuning thickness, which is based on frequency, depth and the quality of your seismic. In the sample world, when we’re classifying samples, we really don’t have that. Since we’re working at the sample rate, the vertical resolution of the sample statistics is based on the interval velocity of the rock from which the sample is taken. So there’s no interpolation, and it’s based on what it’s seeing regardless of depth and regardless of frequency. I’ve been able to find seven-foot-thick sands at 11,000 feet in the Gulf Coast, and I’ve been able to see 18-foot porosity intervals in carbonates at 2,100 feet. So it’s based on the velocity of the rock at the sample.
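The resolution argument can be put in numbers. A minimal sketch, assuming each sample spans the two-way travel time dt, so its thickness is v·dt/2, and comparing that with the common quarter-wavelength rule for tuning thickness, v/(4f). The 25 Hz dominant frequency is an assumed value for illustration; 14,000 ft/s is the interval velocity quoted later in the talk for the Meramec.

```python
def sample_thickness_ft(velocity_ftps, sample_interval_ms):
    """Rock thickness spanned by one sample: two-way time, so v * dt / 2."""
    return velocity_ftps * (sample_interval_ms / 1000.0) / 2.0

def tuning_thickness_ft(velocity_ftps, dominant_freq_hz):
    """Quarter-wavelength tuning thickness, v / (4 f)."""
    return velocity_ftps / (4.0 * dominant_freq_hz)

for v in (10_000, 14_000, 18_000):
    # 25 Hz is an assumed dominant frequency, used only for comparison.
    print(f"{v} ft/s: sample {sample_thickness_ft(v, 1.0):.1f} ft, "
          f"tuning {tuning_thickness_ft(v, 25.0):.1f} ft")
```

At 14,000 ft/s a 1 ms sample spans about 7 ft, the same order as the thin sands mentioned above, while quarter-wavelength tuning at 25 Hz is about 140 ft.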

Now with that, I’ll get off my soapbox about Paradise and get on to the work I’ve been doing. This work was actually done several years ago. TGS approached us to do a proof of concept: they wanted to see how well this classification process would work in an unconventional reservoir in Oklahoma, specifically the Meramec formation in central Oklahoma across Blaine County and Kingfisher County. They gave us 195 square miles of data, and the project objectives were to see if we could discriminate production in the formation, tell why some wells were better than others, and understand the accuracy of the neural classification results.

Part of the assumptions and challenges was that production is not always related only to geological changes. Because there was a combination of long laterals and straight holes, we decided to look only at the straight-hole data, because of the variance that can come from fracking, lateral length and, to some degree, the operators. So the only thing that’s static and good in here is what happened with the straight-hole data. Another challenge was that porosity and permeability could not be calculated from the log curves provided in order to calibrate the wells. Because we were using straight holes, most of the wells were drilled in the 80s and 90s; my newest well in there was drilled in 2003, I think. So the log curves left a lot to be desired.

It was also difficult to isolate specific production through the perforated zone in all the wells, because of the age of the wells and the nature of the rock itself, and some wells were shut in at times, so they were hoping we could find a way to isolate production from specific perforated zones. Again, the decision was made to use only straight holes because of the variables in completions in the lateral wells.

So this is the 195 square miles; you can see the live trace outline right here. I know the other writing is a little bit small, but they gave me 35 wells and held five wells back as blind wells to see how well I could predict what was going on. What I’ve done is take the cumulative production information: the really poor wells are in green, and the medium-class wells, which have produced 50,000 barrels or more, are in pink. The very best well out of the 35 had accumulated 240,000 barrels of oil and 2.23 BCF of gas. This was my star child; I really wanted to look at it and see if I could see what was going on in this well as opposed to all the other wells in these 195 square miles.

So the first thing I did was map the top of the Meramec formation, which is Mississippian in age. Again, this is in central Oklahoma in Blaine and Kingfisher counties, and this is the structure map. You’re getting deeper as you go to the southwest, because you’re going into the fringes of the Anadarko Basin, which is a very deep basin. My good well, the Effie Casady, is right here. I have a North-South cross section that I’ll be focusing on, and I also built an East-West cross section through the medium and poor wells.

The data quality is excellent. TGS did a wonderful job, and I have to thank them again for allowing me to do this work. What I want to show here is the top of the Pennsylvanian Big Lime, which in some areas they call the Osage; the Mississippian starts right about here in this middle section. The top of the Meramec is this hot pink horizon right here, and then you get into the Woodford, which is this trough. If you look at my color bar, I keep my troughs in black and my peaks in orange. I call it my Halloween color bar; I’ve used it for 30 years. This black trough right here is basically the Woodford section itself, and the Woodford ends up being the source rock for the Meramec production.

So basically we’re looking at the interval between the pink and this yellow, which is the base of the Woodford; those are my horizon limits. One thing I want you to notice is that as you go from the North side of the survey to the South side, the section expands: the Meramec is getting thicker and the Woodford is getting thicker. That should be one clue as to why my really good well down here might have produced a little more than the wells to the North, which were generally poorer.

Now, one of the things I do is look at principal component analysis. I generate about 17 or 18 attributes, all instantaneous attributes at first. In this case I did not have any AVO data; I didn’t have any angle stacks or offset stacks. I only had a PSTM volume, and then they gave me a series of inversion volumes. I probably won’t talk much about the inversion because it didn’t work well. My opinion about inversion is that it’s good when you’ve got a lot of well control, but it’s a modeling process in and of itself, so when you get very far away from your well control, the model starts to fall apart. Classification, or SOM, is different: one neuron equals the same rock properties wherever you see it in the data.

I went through principal component analysis and looked at all 16 of my instantaneous attributes, and the first eigenvector started breaking out the attributes that were more pertinent to successful classification in the volume than the others. Right off the bat, I see five attributes that contain about 70% of the information that all 16 would have. Looking at the eigenvalues, you can see a lot of variance in the data set. The way Paradise works, it does this principal component analysis on every inline in the data set. The green line represents the median for the whole data set, but you can pick any one inline and look at its values and the attributes that show up at the top of the eigenvector, or do a group of inlines across a field.
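The eigenvector screening described above can be sketched with NumPy’s eigendecomposition. This is a hedged illustration, not how Paradise computes it: the attributes are standardized first (an assumption, so amplitude units don’t swamp the result), attributes are ranked by the magnitude of their loading on the first eigenvector, and the per-inline helper mimics the “PCA on every inline, median across the data set” idea. The attribute names and data are hypothetical.

```python
import numpy as np

def pca_attribute_ranking(X, names):
    """X: (n_samples, n_attributes). Returns eigenvalues (largest first),
    cumulative fraction of variance, and names ranked by |loading| on PC1."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize so units don't dominate
    evals, evecs = np.linalg.eigh(np.cov(Z, rowvar=False))  # symmetric matrix
    order = np.argsort(evals)[::-1]            # eigh returns ascending order
    evals, evecs = evals[order], evecs[:, order]
    cumulative = np.cumsum(evals) / evals.sum()
    ranked = [names[i] for i in np.argsort(np.abs(evecs[:, 0]))[::-1]]
    return evals, cumulative, ranked

def median_eigenvalues_by_inline(volumes):
    """volumes: (n_attributes, inlines, crosslines, samples).
    Run PCA on each inline separately and return the median eigenvalue curve."""
    n_attr, n_inlines = volumes.shape[:2]
    curves = []
    for il in range(n_inlines):
        X = volumes[:, il].reshape(n_attr, -1).T   # samples x attributes, one inline
        evals, _, _ = pca_attribute_ranking(X, list(range(n_attr)))
        curves.append(evals)
    return np.median(np.array(curves), axis=0)

# Hypothetical data: "envelope" and "sweetness" share a signal; the rest is noise.
rng = np.random.default_rng(0)
base = rng.normal(size=5000)
X = np.column_stack([base, base + 0.1 * rng.normal(size=5000),
                     rng.normal(size=5000), rng.normal(size=5000)])
names = ["envelope", "sweetness", "inst_freq", "thin_bed"]
evals, cumulative, ranked = pca_attribute_ranking(X, names)
```

Here the two correlated attributes rise to the top of the first eigenvector, which is the kind of signal used to pick a short list of attributes for the SOM.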

So I used a recipe built from the top attributes in the first two eigenvectors. In this case I’ve got attenuation, envelope, Hilbert, instantaneous frequency, instantaneous phase, normalized amplitude, relative acoustic impedance, sweetness, and thin bed. What I notice right off the bat is my really good well, the 240,000-barrel well right here. Unlike a lot of the other wells in the area, where they just perforated the whole Meramec section or the majority of it, the operator on this well perforated the bulk of it and then did spot perforations in two other areas. I got to looking at the area down here, which had unique neurons, or classification clusters, that were not apparent at the base of any other well or very many wells in this section. Here’s neuron number 71, which is kind of brownish in color, and neuron number 72, which is yellow, and you can see the yellow and the brown occur mainly around the Effie Casady well and a couple of other areas, but not very consistently in the rest of the section.

What I’m able to do is go in, squeeze down and look at these. When I pulled up the log curves for the Effie Casady well, I noticed that the two sets of perforations right here and right here corresponded to better resistivity in that well. In an unconventional play, better resistivity can mean rock that’s more brittle and can be fracked more easily, or it might be a sandier section with higher organic carbon, a higher TOC. So I was especially interested in this lower zone again, where I had neurons number 71 and 72, and which had the highest resistivity of anything in the Meramec section in that well.

So I can isolate those two neurons: turn everything else off and see just where those two neurons occur in this whole 195-square-mile area. We can do that because we can take the upper horizon and the lower horizon and squeeze the interval down to eliminate spurious information and concentrate on our zone of interest, these two lower sets of perforations; that’s what I call the sculpted interval. When you look at that, you’ll notice that the Effie Casady is right here, this area is where my medium wells were, and everything else, if we go back to the production map, was in the poor category. So that tells me right off the bat that these two neurons are probably key not only to porosity and permeability but also to production in this whole area for the Meramec.
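Isolating a few neurons inside a horizon-bounded window amounts to a boolean mask. A minimal sketch, assuming the classification is stored as a 3D array of neuron numbers and the two horizons have already been converted to sample indices for each trace; the function name and the tiny arrays are hypothetical. Summing the mask down each trace gives a map of how many voxels of the chosen neurons occur at each location.

```python
import numpy as np

def sculpted_neuron_mask(neuron_volume, top_idx, base_idx, keep_neurons):
    """neuron_volume: (inlines, crosslines, samples) winning-neuron numbers.
    top_idx, base_idx: (inlines, crosslines) sample indices of the bounding horizons.
    True only where a voxel lies between the horizons AND holds a chosen neuron."""
    k = np.arange(neuron_volume.shape[2])[None, None, :]
    inside = (k >= top_idx[:, :, None]) & (k <= base_idx[:, :, None])
    return inside & np.isin(neuron_volume, keep_neurons)

# Tiny hypothetical example: 2 x 2 traces, 10 samples each.
nv = np.zeros((2, 2, 10), dtype=int)
nv[0, 0, 3:6] = 71        # three voxels of neuron 71 inside the interval
nv[1, 1, 8] = 72          # one voxel of neuron 72, but below the base horizon
top = np.full((2, 2), 2)
base = np.full((2, 2), 6)
mask = sculpted_neuron_mask(nv, top, base, [71, 72])
count_map = mask.sum(axis=2)   # chosen-neuron voxels per trace, i.e. a map view
```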

Now, one thing we can do is take the voxels, because we know the sample height, in this case one millisecond, and the area, from the bin spacing of the 3D, and turn those voxels into actual little volumes; this is the process we go through to create Geobodies. Once you have your Geobodies created, if you know very specific information, you can turn around and look at those Geobodies in terms of reserves. So that’s what I did in this process. This is the Geobody that corresponds to that lower zone of perforations. The assumption I’m making here is that this is where the predominant amount of hydrocarbons came from, because it has the highest resistivity, it was a very specific zone that they perforated, and it’s abundant around the Effie Casady well.
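Turning selected voxels into Geobodies can be approximated with connected-component labeling. This sketch assumes face connectivity (the default structuring element of SciPy’s `ndimage.label` in 3D) and a hypothetical minimum-size filter; Paradise’s own Geobody rules may differ.

```python
import numpy as np
from scipy import ndimage

def find_geobodies(mask, min_voxels=1):
    """Label face-connected groups of True voxels in a 3D boolean mask.
    Returns the label volume and {label: voxel_count} for bodies >= min_voxels."""
    labels, _ = ndimage.label(mask)      # default structure: 6-connectivity in 3D
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0                         # label 0 is background, never a body
    keep = np.flatnonzero(sizes >= max(min_voxels, 1))
    return labels, {int(lab): int(sizes[lab]) for lab in keep}

mask = np.zeros((4, 4, 4), dtype=bool)
mask[0, 0, 0:2] = True                   # a 2-voxel body
mask[3, 3, 2:4] = True                   # a 3-voxel body...
mask[3, 2, 3] = True                     # ...joined through a shared face
labels, bodies = find_geobodies(mask)
```

The voxel count of each body is the input to the volumetric step; a minimum size helps drop isolated single voxels that are more likely noise than reservoir.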

So I have the sample counts, interior and exterior. If you know the velocity of the rock, the net-to-gross ratio of your zone of interest in that Geobody, your porosity and your water saturation, then you can calculate how much hydrocarbon pore volume, in cubic feet, is attached to that particular Geobody; we can do this in acre-feet as well. I have a buddy who worked for a larger independent and was in charge of the Meramec group in Oklahoma, and I asked him, “What are your engineers using for numbers in this area for their reserve estimates?” He came back with 14,000 feet per second for velocity; he said they’re generally using a net-to-gross ratio of 60%, six percent porosity for some of the better wells, and 40% water saturation.
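The volumetric step reduces to a few multiplications. This sketch assumes each voxel is one bin by one bin in area and v·dt/2 thick, and applies net-to-gross, porosity and (1 - Sw) to get hydrocarbon pore volume. The voxel count and the 110 ft bin spacing are hypothetical placeholders, since the talk doesn’t give them, so the output is not meant to reproduce the 2,725 acre-feet figure.

```python
ACRE_FT3 = 43_560.0  # cubic feet in one acre-foot

def geobody_volumetrics(n_voxels, bin_x_ft, bin_y_ft, dt_ms,
                        velocity_ftps, net_to_gross, porosity, water_sat):
    """Bulk and hydrocarbon pore volume of a Geobody built from voxels."""
    thickness_ft = velocity_ftps * (dt_ms / 1000.0) / 2.0   # two-way time per sample
    bulk_ft3 = n_voxels * bin_x_ft * bin_y_ft * thickness_ft
    hcpv_ft3 = bulk_ft3 * net_to_gross * porosity * (1.0 - water_sat)
    return {
        "thickness_ft": thickness_ft,
        "bulk_ft3": bulk_ft3,
        "bulk_acre_ft": bulk_ft3 / ACRE_FT3,
        "hcpv_ft3": hcpv_ft3,
        "hcpv_acre_ft": hcpv_ft3 / ACRE_FT3,
    }

# Parameters quoted in the talk; the voxel count and 110 ft bins are hypothetical.
result = geobody_volumetrics(n_voxels=10_000, bin_x_ft=110.0, bin_y_ft=110.0,
                             dt_ms=1.0, velocity_ftps=14_000.0, net_to_gross=0.60,
                             porosity=0.06, water_sat=0.40)
```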

He also told me they’re getting roughly 225 barrels of oil equivalent per acre-foot, so I can take that number and compare it to the actual produced number in the well. So I did that: I divided the Geobody volume in cubic feet by 43,560 and got 2,725 acre-feet for this green Geobody, multiplied it by 225 BOE per acre-foot, and came up with 613,125 BOE. Then I took the oil production and the gas production in the Effie Casady and converted the gas to barrels of oil equivalent, and what the well has actually produced is 611,685 BOE, compared to the calculated amount. Now, this well is still producing; it hasn’t been plugged, and it was drilled in 1980, so it’s been producing for 41 years, which is a long time, and it still has some production left in it. Of course, you never get everything out of the rock, but if you compare the actual number to what I calculated, I’m less than one percent off. That’s pretty good; I would challenge most engineers to get that close when it comes to estimating reserves.
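The reserve comparison is simple arithmetic, sketched here with the figures from the talk. The 6:1 gas-to-oil conversion is an assumption (a common convention; the talk doesn’t say which factor was used), though with the rounded 240,000 barrels and 2.23 BCF it lands within about 20 barrels of the reported 611,685 BOE.

```python
MCF_PER_BOE = 6.0  # assumed: 6 Mcf of gas per barrel of oil equivalent

def to_boe(oil_bbl, gas_cf):
    """Combine oil (barrels) and gas (cubic feet) into barrels of oil equivalent."""
    return oil_bbl + gas_cf / (MCF_PER_BOE * 1000.0)

def percent_error(estimate, actual):
    return 100.0 * (estimate - actual) / actual

estimate_boe = 2_725 * 225     # acre-feet x BOE per acre-foot, as quoted
actual_boe = 611_685           # reported cumulative for the Effie Casady
print(estimate_boe)                                          # 613125
print(round(percent_error(estimate_boe, actual_boe), 2))     # 0.24
print(round(to_boe(240_000, 2.23e9)))                        # 611667
```

The estimate comes out about a quarter of a percent above the reported cumulative.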

So I came back, had a meeting with TGS and showed them my results, and they said, well, you could have gotten lucky estimating the reserves for the Effie Casady; what about the group up here, the medium group? It turns out here are the three or four wells that are actually in the medium range, with their cumulative barrels of oil equivalent. The cumulative total for those wells is 581,883 barrels of oil equivalent. So I went back and looked at them, and three of the four wells actually penetrated the 71 neuron, but this last well over here didn’t perforate in that neuron; it perforated in a zone up here just above the Meramec. If you look at the blowup of the neural information, you can see the number 72, the brown neuron right here: they perforated in it here, but this well did not, and they didn’t have any of the yellow number 71 in here like the Effie Casady did, which probably gave it so much better production.

Looking at them, here’s the 72 neuron in this well and in this well, and this well had it but didn’t perforate it. What’s interesting is that the zone of perforations in the one well, the three zones above the Meramec, also perforated in 71 and 72. So the 71 and 72 neurons are indicators of better resistivity and higher carbon content, totally unrelated to what we’re seeing down here in a different completed section, but the same neural information.

So I went back and looked at the Geobody associated with that neuron. This well right here did not produce from that neuron in the zone of interest, but this well right here, the Clydena, did. So I took out the production from this well, took the production from these three wells plus the Clydena, and the numbers came out to 481,681 BOE versus roughly 475,000 BOE. So the bottom line is that for the four wells that did produce from that Geobody, the calculated and actual production differed by less than 2%. Again, it looks a little dendritic, but if you’re looking for a sandy zone or porosity streaks in an unconventional reservoir, you would expect it to look something like this and not like one big clean reservoir.

After that, TGS was happy with the work, and I think they actually have Paradise up in the cloud along with their data, so if you’re a TGS data subscriber, you have access to Paradise to do your work with their seismic data. As a follow-up on that, Paradise has the AASPI consortium software in it, from Kurt Marfurt at the University of Oklahoma. We license that software to run geometric attribute volumes. This is an example of a time slice roughly at the base of the Woodford, showing little channels and such in the Woodford shale itself that are coming through in the similarity volume, and I can also look at fractures. This is 17 milliseconds above the base of the Woodford, roughly the zone of the lower perforations in the Effie Casady well.

I can definitely see some fracture trends, and I think there are some processing busts right in here that TGS was not aware of. This software does pattern recognition, so if there’s a pattern in the data, it’s going to pick up on it. The unintended consequence is that sometimes it picks up acquisition footprint, bad seams in merges, processing busts; there are all sorts of coherent and incoherent patterns that neurons can pick up other than just geology. But there are some definite fracture trends in here that you can see throughout the Meramec. I ran a SOM using two curvature volumes and two similarity volumes so that I could turn clusters on and off, and it turns out that the vast majority of fractures and lineaments in here can be seen with just four neurons. I thought that was pretty interesting.

So the conclusions from the study are that SOM can be very effective at finding sweet spots, even in unconventional reservoirs; I find them all the time in conventional reservoirs, and we’re getting ready to get into that. This is especially true where there are changes in deposition (siltstones, calcareous sands, et cetera), and I’ve even used this process in West Texas in the Bone Spring and the Wolfcamp to find thin calcareous sand channels in those environments. Geobodies can be related to porosity streaks and can be back-calculated to production for use in reserve estimates, and in this study that was very accurate. It’s a good way, going forward, to estimate new sweet spots that have not been drilled.

The key to all of this is applying the geology and the depositional environments to what you’re seeing in the seismic data, and tying to wells with synthetics, because here, unlike with a wavelet where you can be kind of loosey-goosey with your synthetic, you’re tying very specific, sample-based neurons. So you need good synthetics to know what you’re tying to. You also need to understand the function of the attributes you’re using in your analysis: some of them are good for [inaudible], some are good for porosity, and some are good as hydrocarbon indicators. So it’s the combination of attributes in what I call your recipe that’s going to get you further down the road in understanding what’s going on in the subsurface.

Next, I’m looking at exploring in a conventional play in East Texas. This study was more recent than the Meramec study. I had a client who operates, and actually owns a lot of the minerals in, a very good East Texas field that was discovered back in the 90s; there have been a lot of good wells and a lot of production out of this field. This was another proof of concept, for them to see if unsupervised classification on a sample scale could help identify reservoir rock in Cretaceous sands below 13,500 feet, and to see if there were any remaining locations left to be drilled. In the Meramec we were dealing with rocks in the 8,500 to 9,000 foot range; now we’re getting deep, in the 13,500 to 14,300 foot range across this 3D. They thought they’d gotten it all, but there’s always hope there were one or two spots left behind. Again, they gave me a 3D survey, PSTM only; I did not have gathers, AVO volumes or angle stacks. They gave me wells, production, tops and digital logs.

This first map is on the key horizon just above the productive Cretaceous sand. The map is based on the unconformable surface below, and I can tell you it’s below the Austin Chalk: the base of the Austin Chalk is an unconformable surface, and this Cretaceous sand comes up and butts against the unconformity. I’ve built two cross sections in here again, a West-to-East cross section and a South-to-North cross section. In this case, the wells with red squares around them are the wells for which I’ve created synthetics. There is a lot of velocity variation across this 3D as you go from South to North, where the section is thickening again and getting deeper, and even from West to East; there’s a big fault over here, and there’s a big velocity change across that fault. I’ve turned off all the shallow production, because there is shallow production in here, so everything that’s shown is 12,000 feet deep or greater.

Now, the South-to-North arbitrary line is what you’re looking at right here, and this is the unconformable surface we’re going through in this area. It’s probably a little harder to see here, but generally these Cretaceous sands are coming up unconformably, hitting the horizon, and they keep coming up. So across the area you may see five or six different sands, and they’re not the same; they have different rock qualities. Production in here ranges from very poor to medium-good; this is a 24 BCF well that made almost 1.3 million barrels of oil, and you can see the perforations in here.

Now, on the West-East line, the sand is a little harder to map; it’s a little more sporadic in its occurrence. But the premise is still that this is an angular unconformity, and the sands are coming up in different zones and butting up against it. This line goes through my very best well in here, which made 33 BCF and almost 1.8 million barrels of oil. There’s a 26 BCF well, and then you get over here to some poor wells that are even structurally updip from good wells; you’ll see the difference in rock quality when we get into the SOM.

Now I did the same process here. I took all the instantaneous attributes I created from the PSTM volume and went into principal component analysis. Right off the bat, the top five in the first eigenvector happen to be relative acoustic impedance, which to me is a porosity indicator; envelope; Hilbert; envelope second derivative; and sweetness, which I use consistently in the Gulf Coast as a hydrocarbon indicator. So the top-five group right here again carries over 60% of the relevant information from all the attributes used in the analysis.

This is the South-to-North line after processing, and here’s my recipe. I’m using thin bed, because some of these sands are very thin; relative acoustic impedance; envelope; and attenuation, which I threw in because there’s a lot of gas associated with this and I wanted to see the effects of the gas in the SOM. Right off the bat, I started looking at the perforations and identifying the neurons that are repeated over and over again in some of the better wells, and here you can see the combination of numbers 37, 47 and 48. Now, these are just clusters; I haven’t ascribed any specific rock properties to them. I’m just looking at the way they accumulate next to the production in the wells, and I have the production for each well in here: number 37, which is this darker blue; numbers 47 and 48, which are these teal-colored neurons right here; and again 37 and 38, which are blue.

So there are some neurons that keep showing up over and over again in the better wells (12 BCF, 8 BCF, and again in the 7 BCF well) that you don’t see so much in the 1 BCF well. Then when I look at my West-East line, again I’ve got some poor wells that are structurally higher than some very good wells. Now, for the first time, you start seeing this yellow neuron come in, and here is the very best well in the field, the 33 BCF well. I was concerned because I had all this yellow in this other well, so I talked to the operator, and he said they had a 300-foot-thick sand there; it was the most beautiful sand they’d ever seen, with gorgeous permeability and porosity. It was just downthrown across the fault, with the water level in it. So the fact that this yellow neuron is associated with both the best well and one of the poorer wells has only to do with structure. It has nothing to do with the quality of the neuron itself, which I believe, without angle stacks, is showing me rock quality rather than fluid level.

Now, something fairly recent in Paradise is the ability to build cross sections and extract neural information along the cross section. Again, that’s valid only if you have really good synthetics and you know what you’re tied into. On the South-to-North line, I have a grading system for the wells: poor is zero to five BCF, fair is five to 10, good is 10 to 20, then great, and excellent is anything greater than 25 BCF. I can start associating very specific neurons with wells in each category: 55 and 37 with the well that made 24 BCF; 6, 17, 18, 27 and 48 with a well in the medium or good range. So I can start quantifying the neurons against the production in these wells. I did this on the South-to-North cross section, where my zone of perforation is right here, and on the West-East line, where here is my really good well and the well that was downthrown. I don’t have a log on that well, but he’s saying it’s a really thick sand, so I’m assuming that 73 is covering that sand; they only perforated in here because they hit the water level.
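Associating neurons with production classes is essentially bookkeeping. A minimal sketch, assuming each well’s perforated-zone neurons have already been extracted along the cross sections; the well lists are hypothetical, and because the talk gives no range for “great”, this sketch assumes 20 to 25 BCF.

```python
from collections import Counter, defaultdict

# Upper bounds in BCF from the talk; the 20-25 BCF "great" band is an assumption,
# since only its name was given. Values on a boundary fall in the lower grade.
GRADES = [(5.0, "poor"), (10.0, "fair"), (20.0, "good"), (25.0, "great"),
          (float("inf"), "excellent")]

def grade(cum_bcf):
    for upper, name in GRADES:
        if cum_bcf <= upper:
            return name

def neurons_by_grade(wells):
    """wells: iterable of (cumulative_bcf, neurons seen in the perforated zone).
    Returns {grade: Counter(neuron -> number of occurrences)}."""
    tally = defaultdict(Counter)
    for cum_bcf, neurons in wells:
        tally[grade(cum_bcf)].update(neurons)
    return tally

# Hypothetical well picks, loosely echoing the neuron numbers mentioned above.
wells = [(24.0, [55, 37, 37]), (33.0, [73, 55, 37]),
         (12.0, [6, 17, 18, 27, 48]), (1.0, [3, 3, 4])]
tally = neurons_by_grade(wells)
```

Comparing the counters across grades shows which neurons recur only with good production and which recur with poor production.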

So I can get a feel really quickly for which neurons are good, which are medium and which are associated with poor production. Much like in the Meramec, I’ve pulled up all the neurons within the zone of interest; I think I went from the unconformity down 30 or 40 milliseconds, because the bulk of the production is within 30 or 40 milliseconds of the unconformity surface itself. Going through the poor, fair, good, great and excellent wells, you start to see some of the better neurons associated with the East side of the field and some of the poorer neurons with the West side, and, interestingly enough, they break out in clusters that are pretty much at polar opposites on the whole map. Clusters that are close together have very similar properties, but differ just enough to distinguish themselves as separate clusters. So this group is very similar to each other but in four separate clusters. This is the group of poor neurons associated with poor production, and these are the neurons associated with good, better and excellent production.

I can look at each layer independently and then look at the associated attributes that go with that layer and their contribution to that cluster. In this case, the yellow layer is the one associated with the best well (here’s my best well right here, and you can see the cross section lines coming through). Its main contributors are Hilbert, relative acoustic impedance (which, again, I’m using as a porosity indicator), envelope, and sweetness, which can be hydrocarbon indicators; everything else is not contributing that much. Here is neuron number 73, here’s neuron number 55 with the attribute contributions that go with it, and finally here’s neuron number 37, where you see attenuation starting to come in along with relative acoustic impedance. So you’re starting to see some of the gas effect coming in, in this neuron, and here’s the best well.

Now, similar to what I did with the Meramec, in Paradise we have the ability to look at data based on the probability of those data points being in the center of the cluster. Each cluster has a scattering of data points that make up that cluster. What I’ve discovered over the years is that the data points on the peripheral edges of a cluster, while still being part of that cluster, are the most anomalous to that cluster. In the Gulf Coast, if I have angle stacks or offset stacks, or gradient and intercept AVO volumes that I’m using in my analysis, I have found that the AVO effects are most concentrated on these outer edges of the clusters. Excuse me one second, I’m going to get a drink.

So what I want to do is turn off all the data points of the clusters and only look at the outer 10% of the information, which is my most anomalous information in this area. When I do that, you see a lot of the data goes away. Now, this 3D was shot well after the field had been discovered and was being developed. Let me go back. Here are all my clusters with all the data points turned on. Now, when I only look at the most anomalous 10%, what I think I’m seeing are signs of depletion in the field. There’s nothing anomalous about these areas anymore because they’re all depleted. However, there are four zones around the edges of the field which have not been depleted. I killed this one because it has a dry hole in it, and if you peel off the layers, it has a lot of the bad neurons in it. But in this little area right here, this well is a deviated well and the base of the well is right here, so that production sign is not pertinent to this zone. And there are two zones down here that have substantial size to them, and you can see the green, the yellow, and the blue that are associated with what we saw in the best well.
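The "outer 10%" idea can be sketched as follows. This is not Paradise’s low-probability measure, whose exact definition isn’t given in the talk. As a stand-in, it uses the Euclidean distance from each sample to its winning neuron’s prototype and keeps the farthest 10% within each cluster. The prototypes, the per-cluster (rather than global) cutoff, and the function names are all assumptions for illustration.

```python
import math


def winning_neuron(sample, prototypes):
    """Return (index, distance) of the prototype closest to `sample`."""
    best_idx, best_dist = -1, math.inf
    for idx, proto in enumerate(prototypes):
        d = math.dist(sample, proto)  # Euclidean distance in attribute space
        if d < best_dist:
            best_idx, best_dist = idx, d
    return best_idx, best_dist


def outer_fraction(samples, prototypes, fraction=0.10):
    """Indices of samples in the outer `fraction` of their own cluster.

    Samples are grouped by winning neuron; within each group the ones
    farthest from the neuron's prototype are kept (at least one per group).
    Attributes are assumed to be normalized already.
    """
    by_neuron = {}
    for i, sample in enumerate(samples):
        idx, dist = winning_neuron(sample, prototypes)
        by_neuron.setdefault(idx, []).append((dist, i))
    kept = []
    for members in by_neuron.values():
        members.sort(reverse=True)  # farthest from the prototype first
        n_keep = max(1, round(len(members) * fraction))
        kept.extend(i for _, i in members[:n_keep])
    return sorted(kept)


# Two hypothetical neurons in a two-attribute space, ten samples around each.
prototypes = [(0.0, 0.0), (10.0, 10.0)]
samples = [(0.1 * k, 0.0) for k in range(10)] + [(10.0, 10.0 + 0.2 * k) for k in range(10)]
print(outer_fraction(samples, prototypes))  # one peripheral sample per cluster
```

Applying the cutoff per cluster keeps a few edge samples from every neuron. A single global cutoff would instead favor whichever clusters happen to be most spread out.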

Now, this area right here is downthrown, or lower, so it may carry a little higher risk, but for right now these are the four zones that I’m seeing left after depletion. So I created Geobodies with them, and I want to go in and look at the Geobody information now. But before I do that, I want to prove to myself that what I think is a good neuron for production in here actually is one.

So here’s one of them that I’m looking at. Just off the cuff, if you look at the areal extent of the Geobody and the neurons in it, and assume a velocity of 14,000 feet per second, a net-to-gross ratio of 80%, a 25% water saturation, and a 30% porosity, then for this area right here, which has dry holes on one side and an oil-producing well on the other but is not depleted, you can put 20 BCF and 1.1 million barrels of oil in that blob.
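The volumetric step can be illustrated with the standard gas-in-place relation, GIIP = 43,560 × A × h × NTG × φ × (1 − Sw) / Bg. Here A is the area in acres, h is the gross thickness in feet, and Bg is the gas formation volume factor in reservoir ft³ per standard ft³. The thickness comes from the Geobody’s two-way-time thickness as h = v × Δt / 2. The velocity, net-to-gross, porosity, and water saturation defaults below are the values quoted in the talk. The area, time thickness, and Bg are hypothetical placeholders. This sketch covers gas only, with no recovery factor, so it is not a reconstruction of the figures quoted above.

```python
ACRE_FT_TO_FT3 = 43_560.0  # cubic feet in one acre-foot


def thickness_from_twt(twt_ms: float, velocity_ft_s: float = 14_000.0) -> float:
    """Gross thickness (ft) from a two-way-time thickness (ms): h = v * t / 2."""
    return velocity_ft_s * (twt_ms / 1000.0) / 2.0


def gas_in_place_scf(area_acres: float, gross_ft: float, ntg: float = 0.80,
                     porosity: float = 0.30, sw: float = 0.25,
                     bg_rcf_per_scf: float = 0.005) -> float:
    """Volumetric gas initially in place, in standard cubic feet.

    bg_rcf_per_scf is the gas formation volume factor (reservoir ft3 per
    standard ft3); the 0.005 default is a hypothetical placeholder.
    """
    hc_pore_volume_ft3 = (ACRE_FT_TO_FT3 * area_acres * gross_ft
                          * ntg * porosity * (1.0 - sw))
    return hc_pore_volume_ft3 / bg_rcf_per_scf


# Hypothetical Geobody: 100 acres, 10 ms two-way-time thick.
h = thickness_from_twt(10.0)             # 70 ft at 14,000 ft/s
print(gas_in_place_scf(100.0, h) / 1e9)  # in BCF
```

The result scales linearly with every input, so an error in the assumed velocity carries straight through to the volume. That is one reason the talk stresses having the correct input data.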

Here, I’ve taken 15% of the yellow because I can’t run a Geobody on low-probability information, but I’m still coming up with 13 and a half BCF and 727,000 barrels of oil. The two areas to the North are a little bit smaller and in the good category: 8.6 BCF and 460-plus thousand barrels of oil, and finally 5.5 BCF and 290,000 barrels of oil. Now, that’s my estimate, based on the idea that these Geobodies are related to neurons which are related to reservoir quality. These are 6.2 million dollar wells, so they’re not for the faint of heart for my client to drill, and he said, okay, that’s all well and good, but how do you prove that those are good hydrocarbon neurons?

One of the ways we have of going about that is to look at bivariate statistics, and we’re going to go back to the cross sections now and the neurons that were associated with the wells. I can put the wells in, pull up the zone of interest I want to use, pull up the associated SOM classification volume that I ran, and then give it some very specific petrophysical cutoff information. In this case, I’m looking for gamma ray values less than 60, porosity of 10% or greater, and resistivity of six ohms or greater to determine what’s wet and what’s not. Then in the chi-square table, if I had multiple runs with multiple topologies (this one happened to be an eight by eight), I could go in and it would tell me which topology it thought was best for the analysis.
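The cutoff step amounts to flagging each log sample as net reservoir or not, and then counting those flags per SOM neuron. Here is a minimal sketch using the cutoffs quoted in the talk: gamma ray below 60 API, porosity of at least 10%, and resistivity of at least 6 ohm-m. The `LogSample` record, the function names, and the sample values are hypothetical. How Paradise stores or resamples logs against the SOM volume is not shown.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogSample:
    gamma_api: float    # gamma ray, API units
    porosity: float     # fractional porosity, e.g. 0.12 for 12%
    resistivity: float  # deep resistivity, ohm-m
    neuron: int         # SOM neuron ID at this sample's depth/time


def is_net_reservoir(s: LogSample, gr_max=60.0, phi_min=0.10, rt_min=6.0) -> bool:
    """Apply the cutoffs: GR < gr_max, porosity >= phi_min, Rt >= rt_min."""
    return s.gamma_api < gr_max and s.porosity >= phi_min and s.resistivity >= rt_min


def tabulate(samples, **cutoffs):
    """Count samples per neuron as {neuron: [reservoir, non_reservoir]}."""
    table = defaultdict(lambda: [0, 0])
    for s in samples:
        table[s.neuron][0 if is_net_reservoir(s, **cutoffs) else 1] += 1
    return dict(table)


# Made-up samples along a perforated interval.
samples = [LogSample(45, 0.18, 12, 38), LogSample(50, 0.15, 9, 41),
           LogSample(80, 0.05, 3, 63), LogSample(40, 0.12, 7, 63)]
print(tabulate(samples))
# Tighter cutoffs, like those used for the poor well: Rt >= 8, porosity >= 8%.
print(tabulate(samples, phi_min=0.08, rt_min=8.0))
```

The per-neuron counts form the contingency table that a chi-square test of independence operates on.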

What happens is, once you give it the cutoffs and the zone of interest to look at, it looks at all the neurons in that zone of interest and determines which ones are really related to reservoir quality and which ones are not. So in this example, for this good well right here, which produced 12.6 BCF and 175,000 barrels of oil, it’s looking at neuron number 38 and neuron number 41, which happen to be the two neurons associated with the production, and it says yes, those are reservoir rocks. Neuron 63, which is up in here, has some reservoir in it but is mainly non-reservoir. Then the chi-square will do a null hypothesis test and tell me whether the null hypothesis is rejected or accepted. In this case the null hypothesis is rejected, which means the alternate hypothesis holds: there is a relationship between net reservoir and the lithological contrast SOM variables.
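The hypothesis test described here is, in its textbook form, a Pearson chi-square test of independence. The null hypothesis is that neuron assignment and the net-reservoir flag are unrelated. The sketch below computes the statistic, the degrees of freedom, and the p-value on a table of per-neuron counts. The counts are made up, and the 0.05 significance level is an assumption. Paradise’s exact implementation is not described in the talk. In SciPy, `scipy.stats.chi2_contingency` gives the same statistic for tables larger than 2×2; for a 2×2 table, pass `correction=False` to match this uncorrected version.

```python
import math


def chi2_sf(x: float, dof: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with `dof` dof.

    Computed as 1 - P(dof/2, x/2), where P is the regularized lower incomplete
    gamma function evaluated by its power series. Fine for the moderate
    statistics of a small table; use a library routine for extreme values.
    """
    if x <= 0.0:
        return 1.0
    s, half_x = dof / 2.0, x / 2.0
    term = total = 1.0 / s
    k = 1
    while term > total * 1e-15 and k < 10_000:
        term *= half_x / (s + k)
        total += term
        k += 1
    lower = total * math.exp(-half_x + s * math.log(half_x) - math.lgamma(s))
    return max(0.0, 1.0 - lower)


def chi_square_independence(table):
    """Pearson chi-square test of independence (no continuity correction).

    `table` rows are SOM neurons; columns are [reservoir, non-reservoir] counts.
    Returns (statistic, degrees_of_freedom, p_value).
    """
    row_sums = [sum(row) for row in table]
    col_sums = [sum(col) for col in zip(*table)]
    if len(row_sums) < 2 or len(col_sums) < 2 or 0 in row_sums or 0 in col_sums:
        raise ValueError("need at least a 2x2 table with no empty rows or columns")
    total = sum(row_sums)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_sums[i] * col_sums[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(row_sums) - 1) * (len(col_sums) - 1)
    return stat, dof, chi2_sf(stat, dof)


# Rows: neurons 38, 41, 63 (counts are made up). Columns: [reservoir, non-reservoir].
stat, dof, p = chi_square_independence([[18, 2], [15, 3], [4, 21]])
print(f"chi2={stat:.1f}, dof={dof}, p={p:.2g}",
      "-> reject null" if p < 0.05 else "-> cannot reject null")
```

A small p-value only says that neuron and reservoir flag are associated. Which neurons carry the reservoir still has to be read from the table itself, as in the talk’s example for neurons 38, 41, and 63.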

For the past few years, most people who have looked at the SOM work that we’ve been doing, or I’ve been doing, have seen it as a black box, because they keep trying to go back to rock properties. Well, here is where you can take the black box out of it and associate specific clusters of information with reservoir-quality rock. So that’s the case for the good well. Here is a poor well which only made 165 million cubic feet of gas and 2,800 barrels of oil. I looked at the perforations across that reservoir and used the same basic cutoffs, except here I’m looking for greater than eight ohms of resistivity and eight percent porosity. It’s telling me that it’s got some reservoir in those two neurons but that most of it is not reservoir, and based on the poor production and the really tight sands, or ratty, shaley sands, in here, I can see why I don’t have a lot of good reservoir and why the well produced poorly. It’s still saying that there is a relationship between net reservoir and the lithological SOM contrast, but it’s telling me I have more non-reservoir than reservoir, and again, that’s borne out by the poor production of the well.

Now, the final well that I looked at was my best well, with neurons 73, 35, and 55. In here, it’s telling me that 55 and 73 are definitely reservoir, but there’s a little bit of non-reservoir in 73. If you look over here at the perforations, you see an area that’s a little bit wetter and a little bit shalier, so it’s picking up on just that piece which is non-reservoir and letting me know that there’s some non-reservoir in there. Again, it’s rejected the null hypothesis, telling me that there is a relationship between net reservoir and the lithological contrast SOM variables. So this is a way I’ve been able to go in and evaluate a field, look for pieces that were left over, and verify that the Geobodies left in these areas could contain, if one were to go in there and drill them, an amount across all four areas of somewhere over 55 BCF and close to four million barrels of reserves. You just have to want to get up and spend 24 million dollars coming in here and drilling these areas.

So the summary and conclusion here is that, using multiple seismic attributes, I can show patterns in the Earth that you can relate to reservoirs when calibrating to wells; that the use of Geobodies, again, can predict accurate volumes of potential hydrocarbons when you have the correct input data; that the use of low probability can help identify stranded reserves in a field (I’ve done this in other fields, I just don’t have the rights to show them to you guys); and that the application of statistical petrophysical methods can verify and reduce risk in identifying reservoir-grade rock in the potential stranded areas. Now, this workflow is not one and done; I don’t stop at one recipe. I’m constantly playing with it and looking at different topologies and the number of clusters I’m using, but it is a very good statistical methodology to go in and look for stranded reserves.

So that’s the end of the unconventional and the conventional cases. The last thing I want to show is some of the latest work we’re doing with SOM. I want to thank TGS and my Texas client, and a big thank you to Carrie Laudon of Geophysical Insights for guiding me through the statistical analysis process, because when I did this last year on this East Texas field, I was clueless as to how the bivariate statistics process worked.

This last piece is some work I’ve been doing in northern Colombia. We’re getting into convolutional neural networks now, in association with SOM, and working with the AASPI software that we have in Paradise to see how we can eliminate the need for manual picking of faults, which is the most dreaded thing a geoscientist has to do when they get a big survey. This survey happens to be about 400 square kilometers in northwestern Colombia, and you can see it’s very complicated when it comes to the fault regime. What we’ve done is take a two-millisecond sample volume and create a neural network fault volume, basically of the faults in here, but we’ve upsampled the [inaudible] to run it through our convolutional neural network. This gets rid of unwanted noise and possible false positives. Then we can go into the AASPI software, take the CNN fault volume, and skeletonize it down to just the basics, again getting rid of false positives and noise, and getting it down to what looks like a valid fault interpretation volume in this area.

So I bring this into Paradise as an attribute volume, and I can pull it up in the 3D viewer and look at what I believe are the fault planes, as opposed to the fault background in some of these other areas, the white areas. Then I want to use it in the SOM classification, so I use it in combination with instantaneous attributes and get something that looks like this. Now I can see my fault planes, and the faults clearly associate themselves in one corner of the 2D map that we have in the 3D viewer. I can then turn on the instantaneous attributes that I believe are associated with the reservoir rock. I’m going to focus on this one well right here, because it was recently drilled by the client and came on pretty well for gas but then watered out. So they want to understand why it might have watered out, and I can see the stratigraphy along with the fault web itself.

So here’s a closeup of that well, and it’s very close to the fault. I can see the different layers of the perforations; they perforated three different zones in here, and it was the upper zone that watered out at the very, very end. Not only do I think that they probably coned water up the fault plane, but I think that the upper reservoir, which finally depleted, either depleted on test or was compartmentalized so that it wasn’t very big relative to the water they were getting up the fault. Now here are the reservoir neurons and the fault neurons, and here’s another look at it. They can come updip and get past this compartmentalization right here, but then the question is whether this fault goes all the way through, and this is an upper structure right here. So maybe the gas has migrated: you’ve got some low-saturation gas in here which could be contributing to the watering out, and maybe the good gas has all migrated up around this fault into this upper structure right here. So here are my reservoir neurons, and here are my fault neurons. If I had this in the 3D viewer right now, I could rotate it around and you would be able to see how beautifully these fault planes are coming out with that skeletonized volume in SOM.

So with that, guys, that’s the end of my presentation. If Susan is ready to take questions and send them my way, I’ll do my best to answer them.

Susan Nash:

Great, thank you. So why don’t we take a look at some of the questions. Let’s see, I didn’t take, so...

Deborah Sacrey:

I’m going to get out of this real quick, Susan, so that I can go back to any slide, in case people kept note of the slide numbers and have a question about a particular slide.

Susan Nash:

Okay, great. I don’t know about a PDF of the slide presentation being available, but you will have the recording available. Here’s a question: is the vertical window of neurons completely random, or can you give it a certain minimum thickness?

Deborah Sacrey:

No. In fact, you can go above or below a horizon in milliseconds if you want, or in depth, since all of this can be generated in depth volumes as well as time volumes. But a lot of times what I will do is set a window between two specific horizons. Basically, the only interpretation I do in conventional models anymore is major flooding surfaces, because I use those as my horizon limiters. So you can do it by time, you can do it by horizon, you can do it just about any way you want.
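For a single trace, the two windowing options described here can be sketched as follows. The first selects the samples between two picked horizons, such as two flooding surfaces. The second hangs a fixed-thickness window below one horizon, such as the 30 to 40 ms below the unconformity mentioned earlier. The sample interval, horizon times, and function names are hypothetical. Paradise’s own window setup is done in its interface and is not shown. The same logic applies in depth if the sample interval is in feet or meters.

```python
def samples_in_window(n_samples: int, dt_ms: float, top_ms: float, base_ms: float):
    """Sample indices on one trace with top_ms <= t <= base_ms (t = i * dt_ms)."""
    if base_ms < top_ms:
        raise ValueError("base horizon must be at or below the top horizon")
    return [i for i in range(n_samples) if top_ms <= i * dt_ms <= base_ms]


def window_below_horizon(n_samples: int, dt_ms: float, horizon_ms: float,
                         thickness_ms: float):
    """Fixed-thickness window hung from a single horizon (e.g. an unconformity)."""
    return samples_in_window(n_samples, dt_ms, horizon_ms, horizon_ms + thickness_ms)


# Hypothetical 1 ms trace: window between two flooding surfaces picked on it...
print(samples_in_window(3000, 1.0, 1204.0, 1212.0))
# ...or 40 ms hung below an unconformity picked at 1500 ms.
print(len(window_below_horizon(3000, 1.0, 1500.0, 40.0)))
```

In a real volume, the horizon times change from trace to trace, so these functions would be called once per trace with that trace’s picks.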

Susan Nash:

Great. Here’s a question from James Hill, will this technique work on 100% 2D data?

Deborah Sacrey:

Absolutely. Now, getting into some of the geometric volumes, you know that geometric volumes are multi-trace calculations. So generating a similarity, coherency, or curvature volume is always a little bit iffy with 2D data, because you’re looking at a single line of traces instead of a full 3D neighborhood of traces. That’s not to say that you can’t do it, and you can certainly use the instantaneous volumes. I’ve got a prospect in South Texas right now that I only have five 2D lines on, so we’re hoping to get enough interest to shoot a 3D before we drill a well.

Susan Nash:

Another question from Kimberly Wagner. What kind of well density is needed in order to get reasonable or reliable results with this sort of analysis?

Deborah Sacrey:

Well, you can do this without wells. I have done this offshore West Africa for a client without any well control at all. You run a little bit higher risk doing that, but if you can start seeing flat spots, distributary sand systems, or patterns that are geological in nature, you don’t have to have well control at all. You do have to have good geologic concepts and an understanding of the attributes you’re using to be able to pull out information. You definitely can see flat spots if you’re using fluid-type attributes such as AVO volumes.

Susan Nash:

Great. So Valentina Bartinova is asking are SOM and PCA considered deep learning?

Deborah Sacrey:

In my mind they’re deep learning. They’ve been around for a long time, and people might think that convolutional neural networks are deeper learning than SOM, but I’ve been using this process for about 10 years now. I’ve probably worked on between 150 and 200 different surveys around the world, and the classification process for understanding the subsurface stratigraphy, and what Mother Nature has given us to work with, is so superior to anything else you can do in the conventional seismic world that I can’t ever go back to conventional wavelet data again. Every time I look at wavelet data, my mind is trying to imagine the subtleties of the clusters that are going on in the background.

Susan Nash:

That’s interesting. Patrick Ing comments that we should think of them as tools in a toolbox: PCA is one of the tools in data science, and SOM is one of the earlier developments in clustering algorithms. I don’t know if you have a thought about that? Okay. Has anyone used this type of analysis for geosteering horizontal wells? This is from David Grumbo.

Deborah Sacrey:

So David, we have several clients that are in the unconventional world, and I can tell you that, based on the detail they can see, especially in the Eagle Ford when we can zone in on the sweet spot, they definitely are using it in geosteering.

Susan Nash:

Interesting. So Bob Kirkland asks, is Kingdom the best software for doing all of this?

Deborah Sacrey:

Well, Kingdom is not part of Paradise. I happen to use Kingdom because I’m an independent, and Kingdom is a lot cheaper than Petrel for me out here in the country. The only thing I do in Kingdom is bring the volumes in and up-sample them, because we found that you can resample the data one time, like going from four milliseconds to two or from two milliseconds to one, without introducing a lot of artifacts, and you get better statistical information and better resolution. So I use Kingdom for some functionality, but all this work is done in a completely separate program that we call Paradise.
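One common way to halve the sample interval, for example from 4 ms to 2 ms, is band-limited (Whittaker–Shannon) sinc interpolation. The talk does not say which resampling method Kingdom uses. The sketch below is a textbook version, not Kingdom’s algorithm. It keeps the original samples, computes each new mid-point as a sinc-weighted sum of all original samples, and truncates the sum at the ends of the trace, which causes some edge ringing.

```python
import math


def sinc(x: float) -> float:
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def upsample_by_two(trace):
    """Resample a trace to half its sample interval (e.g. 4 ms -> 2 ms).

    Original samples are carried over unchanged; each new mid-point is a
    sinc-weighted sum of all original samples. Returns 2 * len(trace) - 1 values.
    """
    out = []
    n = len(trace)
    for i in range(n):
        out.append(trace[i])
        if i < n - 1:
            t = i + 0.5  # mid-point position, in units of the old sample interval
            out.append(sum(trace[k] * sinc(t - k) for k in range(n)))
    return out


# A single spike: the new neighbors take the sinc value at +/- half a sample, 2/pi.
print(upsample_by_two([0.0, 0.0, 1.0, 0.0, 0.0]))
```

Sinc interpolation adds no frequencies above the original Nyquist limit. That fits the talk’s point that a single resampling step adds few artifacts, although it cannot recover detail that was never recorded.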

Susan Nash:

Thank you, that was a good differentiation. So James Hill comments that your estimated production in the unconventional Casady well suggests that the majority of the reserves have been produced. Would you recommend a refrac in this well?

Deborah Sacrey:

Well, what you’re not seeing on that map is all the horizontal wells that have been drilled in the Meramec since that well was drilled in 1980. So we’re looking at a well that probably has very little life left in it, and any life that’s left is probably being consumed by the lateral wells around it, which are not shown on the map.

Susan Nash:

Interesting. That’s good to know. Trevor Richards, and this is probably our last one, asks: have you seen any effects that you might link to production effects? It seems that this could be a powerful tool for characterizing stimulation areas [crosstalk] production effects in mature field areas.

Deborah Sacrey:

Absolutely. Again, just because of the accuracy of the Geobodies that I’ve been able to work with, I would probably put this up against any reservoir simulation model, not only for the detail that you can see in SOM at one-millisecond resolution but for the accuracy when you have known amounts of porosity, permeability, velocity, and so on. Not that I want to become an engineer in my later life; it’s bad enough going from a geologist to a geophysicist. But I can take this tool and go full circle for my clients with some reliability.

Susan Nash:

Well, that’s great. We’ve come to the end of the hour, and I’d like to thank you, Deborah, and also our sponsor, Geophysical Insights. We’re really happy that they sponsored this lunch and learn, and I appreciate their generosity. I’d also like to remind everyone that you will be getting an email with the link to the recording, and to ask whether you are interested in any other lunch and learn topics. I’d like to say thank you to Patrick Ing and let him have a chance to say a few last words.

Patrick Ing:

Certainly, Susan. And Deborah, I really enjoyed your neurons presentation. One comment: as we’ve seen over the decades, geologists are very good at seeing things that others don’t, using their biological neurons. What you’re showing us today is the use of artificial neurons, and combining the two may be the best way to keep succeeding, maybe in developing these assets and in looking for new ones.

Deborah Sacrey:

Yeah, well, Patrick, one last thing I’d like to say: a lot of people think that machine learning is going to get rid of interpreters. I say we’re going to need more interpreters, and interpreters with experience, because you still have to interpret the results and combine them with well information and an understanding of geology to make sure that you’re on the right track and that you’re accurate. So machine learning can make life easier in terms of fault picking, but we’re still going to be needed to understand everything that it’s doing.

Patrick Ing:

Thank you Deborah, thank you Susan.

Susan Nash:

Thank you. Well, thank you everyone, and thank you again to Deborah and Patrick, and we will see you at the next one.

Deborah Sacrey:

Excellent. Thank you.

Patrick Ing:

Bye bye.